Fitting

|

Applied Machine Learning with R The R Bootcamp @ AMLD |

|

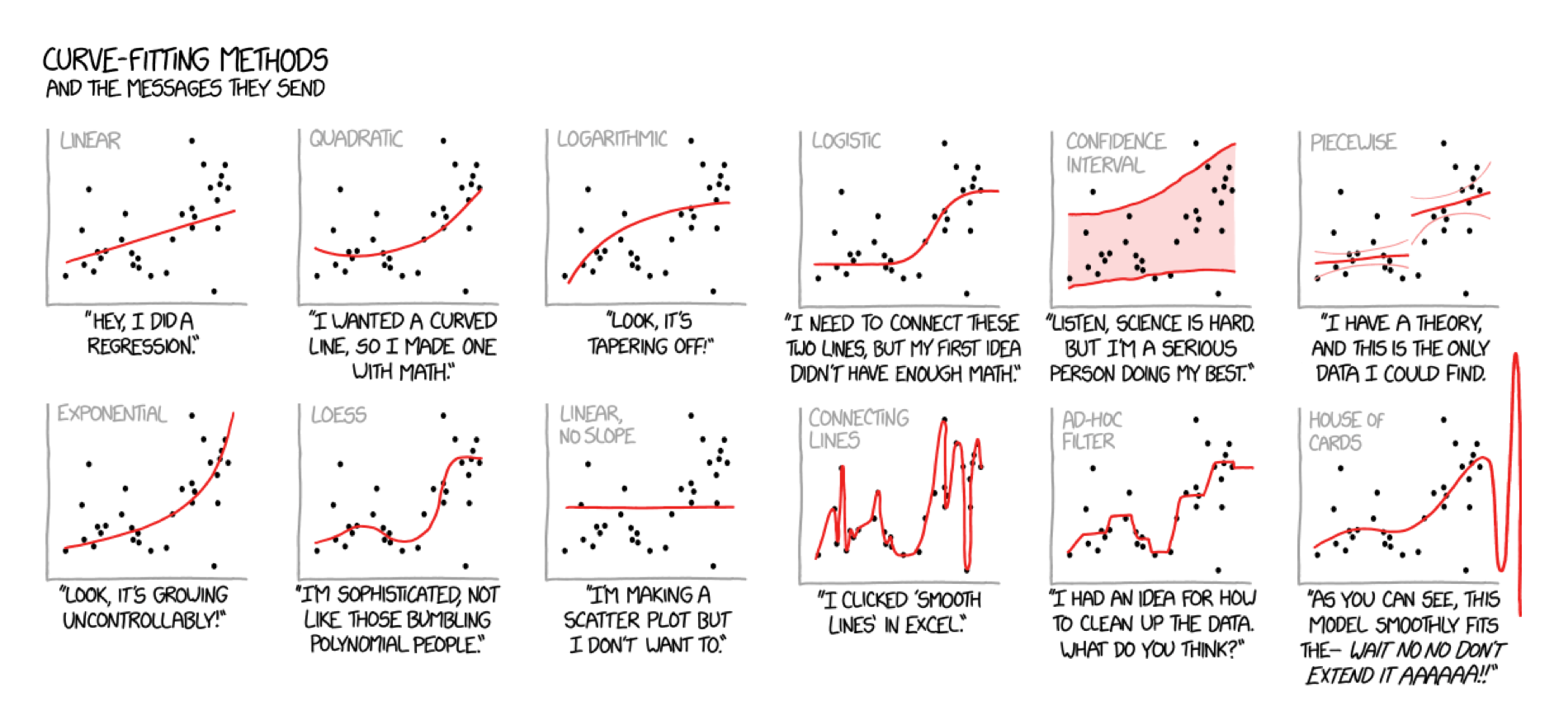

adapted from xkcd.com

Overview

In this practical, you’ll practice the basics of fitting and exploring regression models in R using the caret package.

By the end of this practical you will know how to:

- Fit a regression model to training data.

- Explore your fit object with generic functions.

- Evaluate the model’s fitting performance using accuracy measures such as MSE and MAE.

- Explore the effects of adding additional features.

Tasks

A - Setup

Open your

RBootcamp_AMLD2020R project. It should already have the folders1_Dataand2_Code. Make sure that the data file(s) listed in theDatasetssection are in your1_DatafolderOpen a new R script and save it as a new file called

Fitting_practical.Rin the2_Codefolder.Using

library()load the set of packages for this practical listed in the packages section above.

# Load packages necessary for this script

library(tidyverse)

library(caret)- For this practical, we’ll use a dataset of 388 U.S. Colleges. The data is stored in

college_train.csv. Using the following template, load the dataset into R ascollege_train:

# Load in college_train.csv data as college_train

college_train <- read_csv(file = "1_Data/college_train.csv")Take a look at the first few rows of the dataset by printing it to the console.

Print the numbers of rows and columns using the

dim()function.

# Print number of rows and columns of college_train

dim(XXX)- Open the dataset in a new window using

View(). How does it look?

View(XXX)- Before starting to model the data, we need to do a little bit of data cleaning. Specifically, we need to convert all character columns to factors: Do this by running the following code:

# Convert character to factor

college_train <- college_train %>%

mutate_if(is.character, factor)B - Determine sampling procedure

In caret, we define the computational nuances of training using the trainControl() function. Because we’re learning the basics of fitting, we’ll set method = "none" for now. (Note that you would almost never do this for a real prediction task, you’ll see why later!)

# Set training resampling method to "none"

ctrl_none <- trainControl(method = "none") C - Fit a regression model

- Using the code below, fit a regression model predicting graduation rate (

Grad.Rate) as a function of one feature:PhD(percent of faculty with PhDs). Save the result as an objectGrad.Rate_glm. Specifically,…

- set the

formargument toGrad.Rate ~ PhD. - set the

dataargument to your training datacollege_train. - set the

methodargument to"glm"for regression. - set the

trControlargument toctrl_none, the object you created previously.

# Grad.Rate_glm: Regression Model

Grad.Rate_glm <- train(form = XX ~ XX,

data = XX,

method = "XX",

trControl = XX)- Explore the fitted model using the

summary().

# Show summary information from the regression model

summary(XXX)- Look at the results, how do you interpret the regression coefficients? What is the general relationship between PhD and graduation rates? Does this make sense?

D - Evaluate accuracy

- Now it’s time to evaluate the model’s fitted values! Use the following code to save the fitted values as

glm_fitted.

# Get fitted values from the Grad.Rate_glm model and save as glm_fitted

glm_fitted <- predict(XXX)- Plot the distribution of your

glm_fittedobject using the code below - what are these values? Do they look reasonable? That is, are they in the range of what you expect the criterion to be?

# Plot glm_fitted

hist(XXX)- Now it’s time to compare your model fits to the true values. We’ll start by defining the vector

criterionas the actual graduation rates.

# Define criterion as Grad.Rate

criterion <- college_train$Grad.Rate- Let’s quantify our model’s fitting results. To do this, we’ll use the

postResample()function, with the fitted values as the prediction, and the criterion as the observed values.

Specifically,

- set the

predargument toglm_fitted(your fitted values). - set the

obsargument tocriterion(a vector of the criterion values).

# Regression Fitting Accuracy

postResample(pred = XXX, # Fitted values

obs = XXX) # criterion valuesYou’ll see three values here. The easiest to understand is MAE which stands for “Mean Absolute Error” – in other words, “on average how far are the predictions from the true values?” A value of 0 means perfect prediction, so small values are good! How do you interpret these results?

- Now we’re ready to do some plotting. But first, we need to re-organise the data a bit. Use the code below to create two dataframes:

accuracy- Raw absolute errorsaccuracy_agg- Aggregate (i.e.; mean) absolute errors

# accuracy - a dataframe of raw absolute errors

accuracy <- tibble(criterion = criterion,

Regression = glm_fitted) %>%

gather(model, prediction, -criterion) %>%

# Add error measures

mutate(ae = abs(prediction - criterion))

# accuracy_agg - Dataframe of aggregate errors

accuracy_agg <- accuracy %>%

group_by(model) %>%

summarise(mae = mean(ae)) # Calculate MAE (mean absolute error)- Print the

accuracyandaccuracy_aggobjects to see how they look.

accuracy

accuracy_agg- Using the code below, create a scatterplot showing the relationship between the true criterion values and the model fits.

# Plot A) Scatterplot of criterion versus predictions

ggplot(data = accuracy,

aes(x = criterion, y = prediction)) +

geom_point(alpha = .2) +

geom_abline(slope = 1, intercept = 0) +

labs(title = "Regression: One Feature",

subtitle = "Line indicates perfect performance",

x = "True Graduation Rates",

y = "Fitted Graduation Rates") +

xlim(0, 120) +

ylim(0, 120)Look at the plot, how do you interpret this? Do you think the model did well or not in fitting the graduation rates?

Let’s create a new violin plot showing the distribution of absolute errors of the model.

# Plot B) Violin plot of absolute errors

ggplot(data = accuracy,

aes(x = model, y = ae, fill = model)) +

geom_violin() +

geom_jitter(width = .05, alpha = .2) +

labs(title = "Distributions of Fitting Absolute Errors",

subtitle = "Numbers indicate means",

x = "Model",

y = "Absolute Error") +

guides(fill = FALSE) +

annotate(geom = "label",

x = accuracy_agg$model,

y = accuracy_agg$mae,

label = round(accuracy_agg$mae, 2))- What does the plot show you about the model fits? On average, how far away were the model fits from the true values?

E - Add more features

So far we have only used one feature (PhD), to predict Grad.Rate. Let’s try again, but now we’ll use a total of four features:

PhD- the percent of faculty with a PhD.Room.Board- room and board costs.Terminal- percent of faculty with a terminal degree.S.F.Ratio- student to faculty ratio.

- Using the same steps as above, create a regression model

Grad.Rate_glmwhich predictsGrad.Rateusing all 4 features (you can also call it something else if you want to save your original model!). Specifically,…

- set the

formargument toGrad.Rate ~ PhD + Room.Board + Terminal + S.F.Ratio. - set the

dataargument to your training datacollege_train. - set the

methodargument to"glm"for regression. - set the

trControlargument toctrl_none.

# Grad.Rate_glm: Regression Model

Grad.Rate_glm <- train(form = XXX ~ XXX + XXX + XXX + XXX,

data = XXX,

method = "XXX",

trControl = XXX)- Explore your model using

summary(). Which features seem to be important? Tip: set the first argument toGrad.Rate_glm.

summary(XXX)- Save the model’s fitted values as a new object

glm_fitted. I.e., set the first argument ofpredict()to yourGrad.Rate_glmmodel.

# Save new model fits

glm_fitted <- predict(XXX)- By comparing the model fits to the true criterion values using

postResample()calculate the Mean Absolute Error (MAE) of your new model that uses 4 features. How does this compare to your previous model that only used 1 feature? Specifically,…

- set the

predargument toglm_fitted, your model fits. - set the

obsargument tocriterion, a vector of the true criterion values.

# New model fitting accuracy

postResample(pred = XXX, # Fitted values

obs = XXX) # criterion values(Optional). Create a scatter plot showing the relationship between your new model fits and the true values. How does this plot compare to your previous one?

(Optional). Create a violin plot showing the distribution of absolute errors. How does this compare to your previous one?

F - Use all features

Alright, now it’s time to use all features available!

- Using the same steps as above, create a regression model

Grad.Rate_glmwhich predictsGrad.Rateusing all features in the dataset. Specifically,…

- set the

formargument toGrad.Rate ~ .. - set the

dataargument to the training datacollege_train. - set the

methodargument to"glm"for regression. - set the

trControlargument toctrl_none.

Grad.Rate_glm <- train(form = XXX ~ .,

data = XXX,

method = "glm",

trControl = XXX)- Explore your model using

summary(), which features seem to be important?

summary(XXX)- Save the model’s fitted values as a new object

glm_fitted.

# Save new model fits

glm_fitted <- predict(XXX)What is the Mean Absolute Error (MAE) of your new model that uses 17 features? How does this compare to your previous model that only used 1 feature?

(Optional). Create a scatter plot showing the relationship between your new model fits and the true values. How does this plot compare to your previous one?

(Optional). Create a violin plot showing the distribution of absolute errors. How does this compare to your previous one?

Classification

G - Make sure your criterion is a factor!

Now it’s time to do a classification task! Recall that in a classification task, we are predicting a category, not a continuous number. In this task, we’ll predict whether or not a college is Private or Public, this is stored as the variable college_train$Private.

- In order to do classification training with

caret, all you need to do is make sure that the criterion is coded as a factor. To test whether it is coded as a factor, you can look at itsclassas follows.

# Look at the class of the variable Private, should be a factor!

class(college_train$Private)- Now, we’ll save the Private column as a new object called

criterion.

# Define criterion as college_train$Private

criterion <- college_train$PrivateH - Fit a classification model

- Using

train(), createPrivate_glm, a regression model predicting the variablePrivate. Specifically,…

- set the

formargument toPrivate ~ .. - set the

dataargument to the training datacollege_train. - set the

methodargument to"glm". - set the

trControlargument toctrl_none.

# Fit regression model predicting Private

Private_glm <- train(form = XXX ~ .,

data = XXX,

method = "XXX",

trControl = XXX)- Explore the

Private_glmobject using thesummary()function.

# Explore the Private_glm object

summary(XXX)- Look at the results, how do you interpret the regression coefficients? Which features seem important in predicting whether a school is private or not?

I - Access classification model accuracy

- Now it’s time to save the model’s fitted values! Do this by running the following code to save the fitted values as

glm_fitted.

# Get fitted values from the Private_glm object

glm_fitted <- predict(XXX)Plot the values of your

glm_fittedobject - what are these values? Do they look reasonable?Now it’s time to calculate model accuracy. To do this, we will use a new function called

confusionMatrix(). This function compares model predictions to areference(in our case, the criterion, and returns several summary statistics). In the code below, we’ll useglm_fittedas the model predictions, and our already definedcriterionvector as the reference (aka, truth). Specifically,…

- set the

dataargument to yourglm_fittedvalues. - set the

referenceargument to thecriterionvalues.

# Show accuracy of glm_fitted versus the true criterion values

confusionMatrix(data = XXX, # This is the prediction!

reference = XXX) # This is the truth!Look at the results, what is the overall accuracy of the model? How do you interpret this?

To visualize the accuracy of your classification models, use the following code to create a bar plot.

# Get overall accuracy from regression model

glm_accuracy <- confusionMatrix(data = glm_fitted,

reference = criterion)$overall[1]

# Combine results into one table

accuracy <- tibble(Regression = glm_accuracy) %>%

gather(model, accuracy)

# Plot the results!

ggplot(accuracy, aes(x = model, y = accuracy, fill = model)) +

geom_bar(stat = "identity") +

labs(title = "Is a college private or public?",

subtitle = "Fitting classification accuracy",

y = "Overall Accuracy") +

ylim(c(0, 1)) +

annotate(geom = "label",

x = accuracy$model,

y = accuracy$accuracy,

label = round(accuracy$accuracy, 2))Examples

# Fitting and evaluating a regression model ------------------------------------

# Step 0: Load packages-----------

library(tidyverse) # Load tidyverse for dplyr and tidyr

library(caret) # For ML mastery

# Step 1: Load and Clean, and Explore Training data ----------------------

# I'll use the mpg dataset from the dplyr package in this example

# no need to load an external dataset

data_train <- read_csv("1_Data/mpg_train.csv")

# Convert all characters to factor

# Some ML models require factors

data_train <- data_train %>%

mutate_if(is.character, factor)

# Explore training data

data_train # Print the dataset

View(data_train) # Open in a new spreadsheet-like window

dim(data_train) # Print dimensions

names(data_train) # Print the names

# Step 2: Define training control parameters -------------

# In this case, I will set method = "none" to fit to

# the entire dataset without any fancy methods

# such as cross-validation

train_control <- trainControl(method = "none")

# Step 3: Train model: -----------------------------

# Criterion: hwy

# Features: year, cyl, displ, trans

# Regression

hwy_glm <- train(form = hwy ~ year + cyl + displ + trans,

data = data_train,

method = "glm",

trControl = train_control)

# Look at summary information

summary(hwy_glm)

# Step 4: Access fit ------------------------------

# Save fitted values

glm_fitted <- predict(hwy_glm)

# Define data_train$hwy as the true criterion

criterion <- data_train$hwy

# Regression Fitting Accuracy

postResample(pred = glm_fitted,

obs = criterion)

# RMSE Rsquared MAE

# 3.246182 0.678465 2.501346

# On average, the model fits are 2.8 away from the true

# criterion values

# Step 5: Visualise Accuracy -------------------------

# Tidy competition results

accuracy <- tibble(criterion = criterion,

Regression = glm_fitted) %>%

gather(model, prediction, -criterion) %>%

# Add error measures

mutate(se = prediction - criterion,

ae = abs(prediction - criterion))

# Calculate summaries

accuracy_agg <- accuracy %>%

group_by(model) %>%

summarise(mae = mean(ae)) # Calculate MAE (mean absolute error)

# Plot A) Scatterplot of criterion versus predictions

ggplot(data = accuracy,

aes(x = criterion, y = prediction, col = model)) +

geom_point(alpha = .2) +

geom_abline(slope = 1, intercept = 0) +

labs(title = "Predicting mpg$hwy",

subtitle = "Black line indicates perfect performance")

# Plot B) Violin plot of absolute errors

ggplot(data = accuracy,

aes(x = model, y = ae, fill = model)) +

geom_violin() +

geom_jitter(width = .05, alpha = .2) +

labs(title = "Distributions of Fitting Absolute Errors",

subtitle = "Numbers indicate means",

x = "Model",

y = "Absolute Error") +

guides(fill = FALSE) +

annotate(geom = "label",

x = accuracy_agg$model,

y = accuracy_agg$mae,

label = round(accuracy_agg$mae, 2))Datasets

| File | Rows | Columns |

|---|---|---|

| college_train.csv | 1000 | 21 |

- The

college_traindata are taken from theCollegedataset in theISLRpackage. They contain statistics for a large number of US Colleges from the 1995 issue of US News and World Report.

Variable description of college_train

| Name | Description |

|---|---|

Private |

A factor with levels No and Yes indicating private or public university. |

Apps |

Number of applications received. |

Accept |

Number of applications accepted. |

Enroll |

Number of new students enrolled. |

Top10perc |

Pct. new students from top 10% of H.S. class. |

Top25perc |

Pct. new students from top 25% of H.S. class. |

F.Undergrad |

Number of fulltime undergraduates. |

P.Undergrad |

Number of parttime undergraduates. |

Outstate |

Out-of-state tuition. |

Room.Board |

Room and board costs. |

Books |

Estimated book costs. |

Personal |

Estimated personal spending. |

PhD |

Pct. of faculty with Ph.D.’s. |

Terminal |

Pct. of faculty with terminal degree. |

S.F.Ratio |

Student/faculty ratio. |

perc.alumni |

Pct. alumni who donate. |

Expend |

Instructional expenditure per student. |

Grad.Rate |

Graduation rate. |

Functions

Packages

| Package | Installation |

|---|---|

tidyverse |

install.packages("tidyverse") |

caret |

install.packages("caret") |

Functions

| Function | Package | Description |

|---|---|---|

trainControl() |

caret |

Define modelling control parameters |

train() |

caret |

Train a model |

predict(object, newdata) |

base |

Predict the criterion values of newdata based on object |

postResample() |

caret |

Calculate aggregate model performance in regression tasks |

confusionMatrix() |

caret |

Calculate aggregate model performance in classification tasks |

Resources

Cheatsheet

from github.com/rstudio