Tuning

|

Applied Machine Learning with R The R Bootcamp @ AMLD |

|

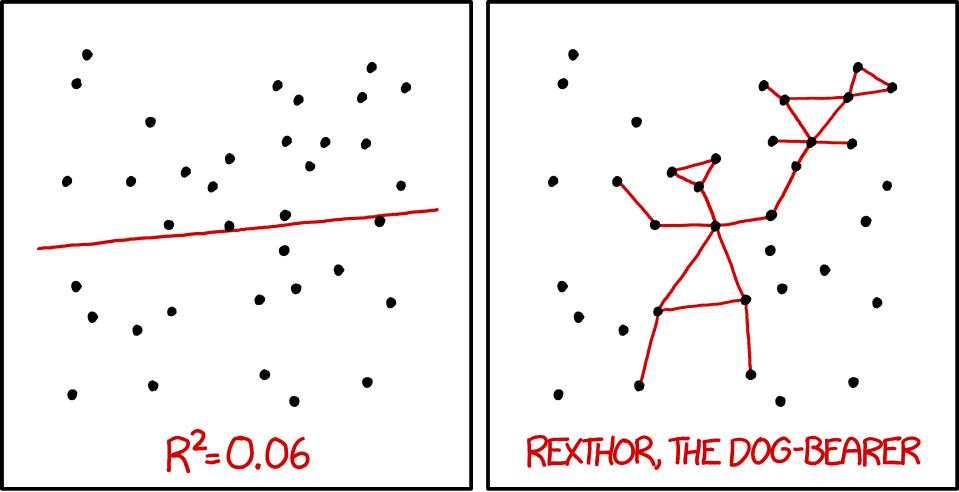

from xkcd.com

Overview

By the end of this practical you will know how to:

- Use cross-validation to select optimal model tuning parameters for decision trees and random forests.

- Compare ‘standard’ regression with lasso and ridge penalised regression.

- Use cross-validation to estimate future test accuracy.

Tasks

In this practical, we will predict the Salary of baseball players from the hitters_train and hitters_test datasets.

A - Setup

Open your

RBootcamp_AMLD2020R project. It should already have the folders1_Dataand2_Code. Make sure that the data file(s) listed in theDatasetssection are in your1_DatafolderOpen a new R script and save it as a new file called

Optimization_practical.Rin the2_Codefolder.Using

library()load the set of packages for this practical listed in the packages section above.

# Load packages necessary for this script

library(tidyverse)

library(caret)

library(party)

library(partykit)- Run the code below to load each of the datasets listed in the

Datasetssection as new objects.

# hitters data

hitters_train <- read_csv(file = "1_Data/hitters_train.csv")

hitters_test <- read_csv(file = "1_Data/hitters_test.csv")- Take a look at the first few rows of each dataframe by printing them to the console.

# Print dataframes to the console

hitters_train

hitters_test- Open each dataset in a new window using

View(). Do they look ok?

# Open each dataset in a window.

View(XXX)

View(XXX)- As always, we need to convert all character columns to factors before we start. Do this by running the following code.

B - Setup trainControl()

- Set up your training by specifying

ctrl_cvas 10-fold cross-validation. Specifically,…

- set

method = "cv"to specify cross validation. - set

number = 10to specify 10 folds.

# Use 10-fold cross validation

ctrl_cv <- trainControl(method = "XX",

number = XX) C - Regression (standard)

- Fit a (standard) regression model predicting

Salaryas a function of all features. Specifically,…

- set the formula to

Salary ~ .. - set the data to

hitters_train. - set the method to

"glm"for regular regression. - set the train control argument to

ctrl_cv.

# Normal Regression --------------------------

salary_glm <- train(form = XX ~ .,

data = XX,

method = "XX",

trControl = XX)Print your

salary_glm. What do you see?Print your final model object with

salary_glm$finalModel.

# Print final regression model

salary_glm$finalModel- Print your final regression model coefficients with

coef().

D - Ridge Regression

It’s time to fit an optimized regression model with a Ridge penalty!

- Before we can fit a ridge regression model, we need to specify which values of the lambda penalty parameter we want to try. Using the code below, create a vector called

lambda_vecwhich contains 100 values spanning a wide range, from very close to 0 to 1,000.

# Vector of lambda values to try

lambda_vec <- 10 ^ (seq(-4, 4, length = 100))- Fit a ridge regression model predicting

Salaryas a function of all features. Specifically,…

- set the formula to

Salary ~ .. - set the data to

hitters_train. - set the method to

"glmnet"for regularized regression. - set the train control argument to

ctrl_cv. - set the

preProcessargument toc("center", "scale")to make sure the variables are standarised when estimating the beta weights (this is good practice for ridge regression as otherwise the different scales of the variables impact the betas and thus the punishment would also depend on the scale). - set the tuneGrid argument such that alpha is 0 (for ridge regression), and with all lambda values you specified in

lambda_vec(we’ve done this for you below).

# Ridge Regression --------------------------

salary_ridge <- train(form = XX ~ .,

data = XX,

method = "XX",

trControl = XX,

preProcess = c("XX", "XX"), # Standardise

tuneGrid = expand.grid(alpha = 0, # Ridge penalty

lambda = lambda_vec))Print your

salary_ridgeobject. What do you see?Plot your

salary_ridgeobject. What do you see? Which value of the regularization parameter seems to be the best?

# Plot salary_ridge object

plot(XX)- Print the best value of lambda by running the following code. Does this match what you saw in the plot above?

# Print best regularisation parameter

salary_ridge$bestTune$lambda- What were your final regression model coefficients for the best lambda value? Find them by running the following code.

# Get coefficients from best lambda value

coef(salary_ridge$finalModel,

salary_ridge$bestTune$lambda)- How do these coefficients compare to what you found in regular regression? Are they similar? Different?

E - Lasso Regression

It’s time to fit an optimized regression model with a Lasso penalty!

- Before we can fit a lasso regression model, we again first specify which values of the lambda penalty parameter we want to try. Using the code below, create a vector called

lambda_vecwhich contains 100 values between 0 and 1,000.

# Determine possible values of lambda

lambda_vec <- 10 ^ seq(from = -4, to = 4, length = 100)- Fit a lasso regression model predicting

Salaryas a function of all features. Specifically,…

- set the formula to

Salary ~ .. - set the data to

hitters_train. - set the method to

"glmnet"for regularized regression. - set the train control argument to

ctrl_cv. - set the

preProcessargument toc("center", "scale")to make sure the variables are standarised when estimating the beta weights (this is also good practice for lasso regression). - set the tuneGrid argument such that alpha is 1 (for lasso regression), and with all lambda values you specified in

lambda_vec(we’ve done this for you below).

# Lasso Regression --------------------------

salary_lasso <- train(form = XX ~ .,

data = XX,

method = "XX",

trControl = XX,

preProcess = c("XX", "XX"), # Standardise

tuneGrid = expand.grid(alpha = 1, # Lasso penalty

lambda = lambda_vec))Print your

salary_lassoobject. What do you see?Plot your

salary_lassoobject. What do you see? Which value of the regularization parameter seems to be the best?

# Plot salary_lasso object

plot(XX)- Print the best value of lambda by running the following code. Does this match what you saw in the plot above?

# Print best regularisation parameter

salary_lasso$bestTune$lambda- What were your final regression model coefficients for the best lambda value? Find them by running the following code.

# Get coefficients from best lambda value

coef(salary_lasso$finalModel,

salary_lasso$bestTune$lambda)- How do these coefficients compare to what you found in regular regression? Are they similar? Different? Do you notice that any coefficients are now set to exactly 0?

F - Decision Tree

It’s time to fit an optimized decision tree model!

Before we can fit a decision tree, we need to specify which values of the complexity parameter

cpwe want to try. Using the code below, create a vector calledcp_vecwhich contains 100 values between 0 and 1.Fit a decision tree model predicting

Salaryas a function of all features. Specifically,…

- set the formula to

Salary ~ .. - set the data to

hitters_train. - set the method to

"rpart"for decision trees. - set the train control argument to

ctrl_cv, - set the tuneGrid argument with all

cpvalues you specified incp_vec.

# Decision Tree --------------------------

salary_rpart <- train(form = XX ~ .,

data = XX,

method = "XX",

trControl = XX,

tuneGrid = expand.grid(cp = cp_vec))Print your

salary_rpartobject. What do you see?Plot your

salary_rpartobject. What do you see? Which value of the complexity parameter seems to be the best?

# Plot salary_rpart object

plot(XX)- Print the best value of

cpby running the following code. Does this match what you saw in the plot above?

# Print best regularisation parameter

salary_rpart$bestTune$cp- Plot your final decision tree using the following code:

# Visualise your trees

plot(as.party(salary_rpart$finalModel)) - How do the nodes in the tree compare to the coefficients you found in your regression analyses? Do you see similarities or differences?

G - Random Forests

It’s time to fit an optimized random forest model!

Before we can fit a random forest model, we need to specify which values of the diversity parameter

mtrywe want to try. Using the code below, create a vector calledmtry_vecwhich is a sequence of numbers from 1 to 10.Fit a random forest model predicting

Salaryas a function of all features. Specifically,…

- set the formula to

Salary ~ .. - set the data to

hitters_train. - set the method to

"rf"for random forests. - set the train control argument to

ctrl_cv. - set the tuneGrid argument such that mtry can take on the values you defined in

mtry_vec.

# Random Forest --------------------------

salary_rf <- train(form = XX ~ .,

data = XX,

method = "XX",

trControl = XX,

tuneGrid = expand.grid(mtry = mtry_vec))Print your

salary_rfobject. What do you see?Plot your

salary_rfobject. What do you see? Which value of the regularization parameter seems to be the best?

# Plot salary_rf object

plot(XX)- Print the best value of

mtryby running the following code. Does this match what you saw in the plot above?

# Print best mtry parameter

salary_rf$bestTune$mtryH - Estimate prediction accuracy from training folds

- Using

resamples(), calculate the estimated prediction accuracy for each of your models. To do this, put your model objects in the named list (e.g.;glm = salary_glm, …). See below.

# Summarise accuracy statistics across folds

salary_resamples <- resamples(list(glm = XXX,

ridge = XXX,

lasso = XXX,

rpart = XXX,

rf = XXX))- Look at the summary of your

salary_resamplesobject withsummary(salary_resamples). What does this tell you? Which model do you expect to have the best prediction accuracy for the test data?

I - Calculate prediction accuracy

- Save the criterion value for the test data as a new vector called

criterion_test.

# Save salaries of players in test dataset as criterion_test

criterion_test <- XXX$XXX- Using

predict(), save the prediction of your regular regression modelsalary_glmfor thehitters_testdata as a new object calledglm_pred. Specifically,…

- set the first argument to

salary_glm. - set the newdata argument to

hitters_test.

# Save the glm predicted salaries of hitters_test

glm_pred <- predict(XXX, newdata = XXX)- Now do the same with your ridge, lasso, decision tree, and random forest models to get the objects

ridge_pred,lasso_pred,rpart_predandrf_pred.

ridge_pred <- predict(XXX, newdata = XXX)

lasso_pred <- predict(XXX, newdata = XXX)

rpart_pred <- predict(XXX, newdata = XXX)

rf_pred <- predict(XXX, newdata = XXX)- Using

postResample(), calculate the aggregate prediction accuracy for each model using the template below. Specifically,…

- set the pred argument to your model predictions (e.g.;

ridge_pred). - set the

obsargument to the true criterion valuescriterion_test).

# Calculate aggregate accuracy for a model

postResample(pred = XXX,

obs = XXX)Which of your models had the best performance in the true test data?

How close were your models’ true prediction error to the values you estimated in the previous section when you ran

resamples()?

Examples

# Model optimization with Regression

# Step 0: Load packages-----------

library(tidyverse) # Load tidyverse for dplyr and tidyr

library(caret) # For ML mastery

library(partykit) # For decision trees

library(party) # For decision trees

# Step 1: Load, clean, and explore data ----------------------

# training data

data_train <- read_csv("1_Data/diamonds_train.csv")

# test data

data_test <- read_csv("1_Data/diamonds_test.csv")

# Convert all characters to factor

# Some ML models require factors

data_train <- data_train %>%

mutate_if(is.character, factor)

data_test <- data_test %>%

mutate_if(is.character, factor)

# Explore training data

data_train # Print the dataset

View(data_train) # Open in a new spreadsheet-like window

dim(data_train) # Print dimensions

names(data_train) # Print the names

# Define criterion_train

# We'll use this later to evaluate model accuracy

criterion_train <- data_train$price

criterion_test <- data_test$price

# Step 2: Define training control parameters -------------

# Use 10-fold cross validation

ctrl_cv <- trainControl(method = "cv",

number = 10)

# Step 3: Train models: -----------------------------

# Criterion: hwy

# Features: year, cyl, displ

# Normal Regression --------------------------

price_glm <- train(form = price ~ carat + depth + table + x + y,

data = data_train,

method = "glm",

trControl = ctrl_cv)

# Print key results

price_glm

# RMSE Rsquared MAE

# 1506 0.86 921

# Coefficients

coef(price_glm$finalModel)

# (Intercept) carat depth table x y

# 21464.9 11040.4 -215.6 -94.2 -3681.6 2358.9

# Lasso --------------------------

# Vector of lambda values to try

lambda_vec <- 10 ^ seq(-3, 3, length = 100)

price_lasso <- train(form = price ~ carat + depth + table + x + y,

data = data_train,

method = "glmnet",

trControl = ctrl_cv,

preProcess = c("center", "scale"), # Standardise

tuneGrid = expand.grid(alpha = 1, # Lasso

lambda = lambda_vec))

# Print key results

price_lasso

# glmnet

#

# 5000 samples

# 5 predictor

#

# Pre-processing: centered (5), scaled (5)

# Resampling: Cross-Validated (10 fold)

# Summary of sample sizes: 4500, 4500, 4500, 4500, 4500, 4500, ...

# Resampling results across tuning parameters:

#

# lambda RMSE Rsquared MAE

# 1.00e-03 1509 0.858 918

# 1.15e-03 1509 0.858 918

# 1.32e-03 1509 0.858 918

# 1.52e-03 1509 0.858 918

# Plot regularisation parameter versus error

plot(price_lasso)

# Print best regularisation parameter

price_lasso$bestTune$lambda

# Get coefficients from best lambda value

coef(price_lasso$finalModel,

price_lasso$bestTune$lambda)

# 6 x 1 sparse Matrix of class "dgCMatrix"

# 1

# (Intercept) 4001

# carat 5179

# depth -300

# table -213

# x -3222

# y 1755

# Ridge --------------------------

# Vector of lambda values to try

lambda_vec <- 10 ^ seq(-3, 3, length = 100)

price_ridge <- train(form = price ~ carat + depth + table + x + y,

data = data_train,

method = "glmnet",

trControl = ctrl_cv,

preProcess = c("center", "scale"), # Standardise

tuneGrid = expand.grid(alpha = 0, # Ridge penalty

lambda = lambda_vec))

# Print key results

price_ridge

# glmnet

#

# 5000 samples

# 5 predictor

#

# Pre-processing: centered (5), scaled (5)

# Resampling: Cross-Validated (10 fold)

# Summary of sample sizes: 4500, 4500, 4500, 4500, 4500, 4500, ...

# Resampling results across tuning parameters:

#

# lambda RMSE Rsquared MAE

# 1.00e-03 1638 0.835 1137

# 1.15e-03 1638 0.835 1137

# 1.32e-03 1638 0.835 1137

# 1.52e-03 1638 0.835 1137

# 1.75e-03 1638 0.835 1137

# Plot regularisation parameter versus error

plot(price_ridge)

# Print best regularisation parameter

price_ridge$bestTune$lambda

# Get coefficients from best lambda value

coef(price_ridge$finalModel,

price_ridge$bestTune$lambda)

# 6 x 1 sparse Matrix of class "dgCMatrix"

# 1

# (Intercept) 4001

# carat 2059

# depth -131

# table -168

# x 716

# y 797

# Decision Trees --------------------------

# Vector of cp values to try

cp_vec <- seq(0, .1, length = 100)

price_rpart <- train(form = price ~ carat + depth + table + x + y,

data = data_train,

method = "rpart",

trControl = ctrl_cv,

tuneGrid = expand.grid(cp = cp_vec))

# Print key results

price_rpart

# Plot complexity parameter vs. error

plot(price_rpart)

# Print best complexity parameter

price_rpart$bestTune$cp

# [1] 0.00202

# Step 3: Estimate prediction accuracy from folds ----

# Get accuracy statistics across folds

resamples_price <- resamples(list(ridge = price_ridge,

lasso = price_lasso,

glm = price_glm))

# Print summary of accuracies

summary(resamples_price)

# MAE

# Min. 1st Qu. Median Mean 3rd Qu. Max. NA's

# ridge 1094 1100 1117 1137 1170 1217 0

# lasso 869 887 929 918 944 960 0

# glm 856 882 921 920 949 986 0

#

# RMSE

# Min. 1st Qu. Median Mean 3rd Qu. Max. NA's

# ridge 1545 1580 1609 1638 1703 1772 0

# lasso 1323 1479 1518 1509 1583 1593 0

# glm 1350 1429 1526 1509 1582 1702 0

#

# Rsquared

# Min. 1st Qu. Median Mean 3rd Qu. Max. NA's

# ridge 0.798 0.828 0.836 0.835 0.848 0.854 0

# lasso 0.827 0.846 0.858 0.858 0.868 0.902 0

# glm 0.819 0.849 0.863 0.860 0.870 0.888 0

# Step 4: Measure prediction Accuracy -------------------

# GLM ================================

# Predictions

glm_pred <- predict(price_glm,

newdata = data_test)

# Calculate aggregate accuracy

postResample(pred = glm_pred,

obs = criterion_test)

# RMSE Rsquared MAE

# 1654.017 0.832 944.854

# Ridge ================================

# Predictions

ridge_pred <- predict(price_ridge,

newdata = data_test)

# Calculate aggregate accuracy

postResample(pred = ridge_pred,

obs = criterion_test)

# RMSE Rsquared MAE

# 1650.541 0.832 1133.063

# Lasso ================================

# Predictions

lasso_pred <- predict(price_lasso,

newdata = data_test)

# Calculate aggregate accuracy

postResample(pred = lasso_pred,

obs = criterion_test)

# RMSE Rsquared MAE

# 1653.675 0.832 942.870

# Visualise Accuracy -------------------------

# Tidy competition results

accuracy <- tibble(criterion_test = criterion_test,

Regression = glm_pred,

Ridge = ridge_pred,

Lasso = lasso_pred) %>%

gather(model, prediction, -criterion_test) %>%

# Add error measures

mutate(se = prediction - criterion_test,

ae = abs(prediction - criterion_test))

# Calculate summaries

accuracy_agg <- accuracy %>%

group_by(model) %>%

summarise(mae = mean(ae)) # Calculate MAE (mean absolute error)

# Plot A) Scatterplot of truth versus predictions

ggplot(data = accuracy,

aes(x = criterion_test, y = prediction, col = model)) +

geom_point(alpha = .5) +

geom_abline(slope = 1, intercept = 0) +

labs(x = "True Prices",

y = "Predicted Prices",

title = "Predicting Diamond Prices",

subtitle = "Black line indicates perfect performance")

# Plot B) Violin plot of absolute errors

ggplot(data = accuracy,

aes(x = model, y = ae, fill = model)) +

geom_violin() +

geom_jitter(width = .05, alpha = .2) +

labs(title = "Fitting Absolute Errors",

subtitle = "Numbers indicate means",

x = "Model",

y = "Absolute Error (Log Transformed)") +

guides(fill = FALSE) +

annotate(geom = "label",

x = accuracy_agg$model,

y = accuracy_agg$mae,

label = round(accuracy_agg$mae, 2)) +

scale_y_continuous(trans='log')Datasets

| File | Rows | Columns |

|---|---|---|

| hitters_train.csv | 50 | 20 |

| hitters_test.csv | 213 | 20 |

| college_train.csv | 500 | 18 |

| college_test.csv | 277 | 18 |

| house_train.csv | 5000 | 21 |

| house_test.csv | 1000 | 21 |

The

hitters_trainandhitters_testdata are taken from theHittersdataset in theISLRpackage. They are data frames with observations of major league baseball players from the 1986 and 1987 seasons.The

college_trainandcollege_testdata are taken from theCollegedataset in theISLRpackage. They contain statistics for a large number of US Colleges from the 1995 issue of US News and World Report.The

house_trainandhouse_testdata come from https://www.kaggle.com/harlfoxem/housesalesprediction

Variable description of hitters_train and hitters_test

| Name | Description |

|---|---|

Salary |

1987 annual salary on opening day in thousands of dollars. |

AtBat |

Number of times at bat in 1986. |

Hits |

Number of hits in 1986. |

HmRun |

Number of home runs in 1986. |

Runs |

Number of runs in 1986. |

RBI |

Number of runs batted in in 1986. |

Walks |

Number of walks in 1986. |

Years |

Number of years in the major leagues. |

CAtBat |

Number of times at bat during his career. |

CHits |

Number of hits during his career. |

CHmRun |

Number of home runs during his career. |

CRuns |

Number of runs during his career. |

CRBI |

Number of runs batted in during his career. |

CWalks |

Number of walks during his career. |

League |

A factor with levels A and N indicating player’s league at the end of 1986. |

Division |

A factor with levels E and W indicating player’s division at the end of 1986. |

PutOuts |

Number of put outs in 1986. |

Assists |

Number of assists in 1986. |

Errors |

Number of errors in 1986. |

NewLeague |

A factor with levels A and N indicating player’s league at the beginning of 1987. |

Variable description of college_train and college_test

| Name | Description |

|---|---|

Private |

A factor with levels No and Yes indicating private or public university. |

Apps |

Number of applications received. |

Accept |

Number of applications accepted. |

Enroll |

Number of new students enrolled. |

Top10perc |

Pct. new students from top 10% of H.S. class. |

Top25perc |

Pct. new students from top 25% of H.S. class. |

F.Undergrad |

Number of fulltime undergraduates. |

P.Undergrad |

Number of parttime undergraduates. |

Outstate |

Out-of-state tuition. |

Room.Board |

Room and board costs. |

Books |

Estimated book costs. |

Personal |

Estimated personal spending. |

PhD |

Pct. of faculty with Ph.D.’s. |

Terminal |

Pct. of faculty with terminal degree. |

S.F.Ratio |

Student/faculty ratio. |

perc.alumni |

Pct. alumni who donate. |

Expend |

Instructional expenditure per student. |

Grad.Rate |

Graduation rate. |

Variable description of house_train and house_test

| Name | Description |

|---|---|

price |

Price of the house in $. |

bedrooms |

Number of bedrooms. |

bathrooms |

Number of bathrooms. |

sqft_living |

Square footage of the home. |

sqft_lot |

Square footage of the lot. |

floors |

Total floors (levels) in house. |

waterfront |

House which has a view to a waterfront. |

view |

Has been viewed. |

condition |

How good the condition is (Overall). |

grade |

Overall grade given to the housing unit, based on King County grading system. |

sqft_above |

Square footage of house apart from basement. |

sqft_basement |

Square footage of the basement. |

yr_built |

Built Year. |

yr_renovated |

Year when house was renovated. |

zipcode |

Zip code. |

lat |

Latitude coordinate. |

long |

Longitude coordinate. |

sqft_living15 |

Living room area in 2015 (implies some renovations). This might or might not have affected the lotsize area. |

sqft_lot15 |

lot-size area in 2015 (implies some renovations). |

Functions

Packages

| Package | Installation |

|---|---|

tidyverse |

install.packages("tidyverse") |

caret |

install.packages("caret") |

partykit |

install.packages("partykit") |

party |

install.packages("party") |

Functions

| Function | Package | Description |

|---|---|---|

trainControl() |

caret |

Define modelling control parameters |

train() |

caret |

Train a model |

predict(object, newdata) |

stats |

Predict the criterion values of newdata based on object |

postResample() |

caret |

Calculate aggregate model performance in regression tasks |

confusionMatrix() |

caret |

Calculate aggregate model performance in classification tasks |

Resources

from github.com/rstudio