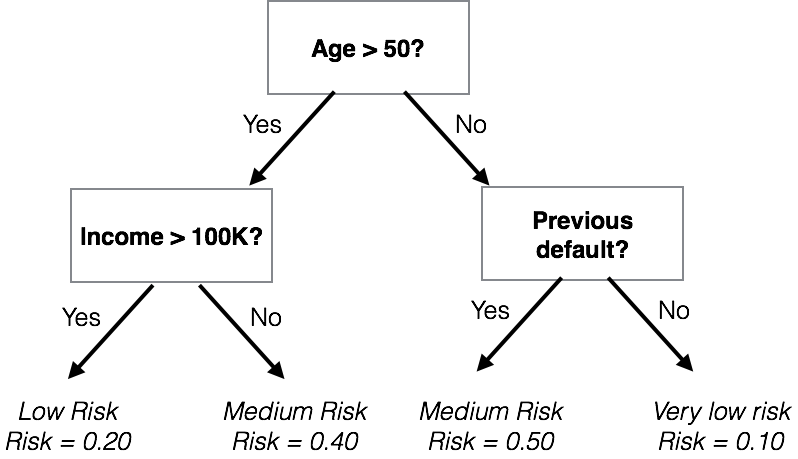

class: center, middle, inverse, title-slide # Machine Learning ### The R Bootcamp<br/>Twitter: <a href='https://twitter.com/therbootcamp'><span class="citation">@therbootcamp</span></a> ### April 2018 --- # What is machine learning? .pull-left6[ ### Algorithms autonomously learning from data. Given data, an algorithm tunes its *parameters* to match the data, understand how it works, and make predictions for what will occur in the future. <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/mldiagram_A.png" width="80%" style="display: block; margin: auto;" /> ] .pull-right4[ <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/machinelearningcartoon.png" width="70%" style="display: block; margin: auto;" /> ] --- # Everyone uses machine learning .pull-left6[ ### Everyone! - How does Google know what search results you want? - How does Amazon know what products to recommend? - How does Netflix decide what shows you'll want to watch next? - How do Tesla cars recognize objects and predict accidents? > Machine learning drives our algorithms for demand forecasting, product search ranking, product and deals recommendations, merchandising placements, fraud detection, translations, and much more. ~ Jeff Bezos, Amazon founder ] .pull-right4[ <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/mlexamples.png" width="100%" style="display: block; margin: auto;" /> ] --- # Predicting Disease .pull-left45[ ### Past "Training" Data | id|sex | age|fam_history |smoking | disease| |--:|:---|---:|:-----------|:-------|-------:| | 1|m | 46|Yes |TRUE | 0| | 2|m | 47|Yes |FALSE | 1| | 3|f | 42|No |FALSE | 1| | 4|m | 49|No |FALSE | 1| | 5|m | 41|No |TRUE | 1| | 6|m | 43|Yes |FALSE | 0| | 7|f | 45|Yes |FALSE | 1| | 8|m | 45|No |TRUE | 1| ] .pull-right45[ ### Future "Test" Data | id|sex | age|fam_history |smoking |disease | |--:|:---|---:|:-----------|:-------|:-------| | 91|m | 39|No |TRUE |? | | 92|m | 46|No |FALSE |? | | 93|m | 45|No |FALSE |? | | 94|m | 54|No |FALSE |? | | 95|m | 44|No |TRUE |? | | 96|m | 44|No |FALSE |? | | 97|f | 42|No |FALSE |? | | 98|m | 44|Yes |TRUE |? | ] --- # Predicting Sales .pull-left45[ ### Past "Training" Data |product | last_month| tweets|sentiment | sales| |:----------|----------:|------:|:---------|-----:| |speaker | 950| 17|++ | 956| |tv | 1013| 64|-- | 1076| |cable | 992| 109|- | 1026| |headphones | 1089| 110|-- | 1077| |phones | 1012| 80|+ | 919| |movies | 1032| 174|- | 956| |games | 942| 112|-- | 928| |drone | 1071| 97|+ | 1023| ] .pull-right45[ ### Future "Test" Data |product | last_month| tweets|sentiment |sales | |:-------|----------:|------:|:---------|:-----| |camera | 884| 118|++ |? | |office | 1025| 142|- |? | |network | 991| 207|-- |? | |storage | 1176| 197|+ |? | |watch | 986| 90|++ |? | |jewelry | 989| 240|++ |? | |glasses | 931| -78|-- |? | |vape | 978| 162|++ |? | ] --- # What is the basic machine learning process? <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/MLdiagram.png" width="95%" /> --- # Why do we separate training from prediction? .pull-left4[ Just because an algorithm can fit past (training) data well, does *not* necessarily mean that it will *predict* new data well. <br> <div class="figure" style="text-align: center"> <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/stockpen.jpg" alt="Anyone can come up with a model of past stock performance. Predicting future performance is much more difficult." width="70%" /> <p class="caption">Anyone can come up with a model of past stock performance. Predicting future performance is much more difficult.</p> </div> ] .pull-right6[ > "Prediction is difficult, especially when it is about the future" ~ Niels Bohr <div class="figure" style="text-align: center"> <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/bohr.jpg" alt="Niels Bohr, Nobel Laureate in Physics" width="20%" /> <p class="caption">Niels Bohr, Nobel Laureate in Physics</p> </div> > "An economist is an expert who will know tomorrow why the things he predicted yesterday didn't happen today." ~ Evan Esar <!-- > "A prediction about the direction of the stock market tells you nothing about where stocks are headed, but a whole lot about the person doing the predicting" ~ Warren Buffett --> ] --- # What do you think? <font size = 5>Can anyone come up with a model that will perfectly match past data but is worthless in predicting future data?</font><br><br> .pull-left45[ ### Past "Training" Data | id|sex | age|fam_history |smoking | disease| |--:|:---|---:|:-----------|:-------|-------:| | 1|m | 46|Yes |TRUE | 0| | 2|m | 47|Yes |FALSE | 1| | 3|f | 42|No |FALSE | 1| | 4|m | 49|No |FALSE | 1| | 5|m | 41|No |TRUE | 1| | 6|m | 43|Yes |FALSE | 0| | 7|f | 45|Yes |FALSE | 1| | 8|m | 45|No |TRUE | 1| ] .pull-right45[ ### Future "Test" Data | id|sex | age|fam_history |smoking |disease | |--:|:---|---:|:-----------|:-------|:-------| | 91|m | 39|No |TRUE |? | | 92|m | 46|No |FALSE |? | | 93|m | 45|No |FALSE |? | | 94|m | 54|No |FALSE |? | | 95|m | 44|No |TRUE |? | | 96|m | 44|No |FALSE |? | | 97|f | 42|No |FALSE |? | | 98|m | 44|Yes |TRUE |? | ] --- # Training (fitting) vs. Testing (prediction) <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/fittingpredictiondarts_A.png" width="70%" style="display: block; margin: auto;" /> --- # Training (fitting) vs. Testing (prediction) <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/fittingpredictiondarts_B.png" width="70%" style="display: block; margin: auto;" /> --- # Training (fitting) vs. Testing (prediction) <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/fittingpredictiondarts_C.png" width="70%" style="display: block; margin: auto;" /> --- # Training (fitting) vs. Testing (prediction) <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/fittingpredictiondarts_D.png" width="70%" style="display: block; margin: auto;" /> --- ## What machine learning algorithms are there? .pull-left55[ There thousands of machine learning algorithms from many different fields. - Computer vision, natural language processing, reinforcement learning... Wikipedia lists 57 *categories* (!) of machine learning algorithms <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/wikipediaml.png" width="80%" style="display: block; margin: auto;" /> ] .pull-right4[ <br><br> ### 3 Algorithims We will focus on 3 algorithms that apply to most tasks: | Algorithm|Complexity| |:------|:----| | Regression| Low / Medium | | Decision Trees| Low | | Random Forests| High | ] --- ## Two types of prediction tasks .pull-left45[ <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/classification_task.png" width="100%" style="display: block; margin: auto;" /> ] .pull-right45[ <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/regression_task.png" width="100%" style="display: block; margin: auto;" /> ] --- .pull-left6[ ## How do you fit and evaluate models in R? <br> | Step|Description| Note / Example | |:------|:---|:------------| | 1| Install model packages| `FFTrees` for Decision Trees<br>`randomForest` for Random Forests| | 2| Get data |Use your own, or get free online datasets| | 3| Train model on data and generate insights|Always look at help menus and online tutorials!| | 4| Predict new data, possibly with cross-validation|Packages such as `mlr` and `caret` can really help| ] .pull-right35[ <br> <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/machinelearning_r_ss.png" width="90%" style="display: block; margin: auto;" /> ] --- # How do you fit and evaluate models in R? .pull-left45[ ### Fitting a model ```r A_model <- A_fun(formula = y ~., data = data_train, ...) ``` | Argument| Description| Note | |------:|:----|:---| | formula| Formula indicating variables to use| `y ~ .` is often used as a catch-all | | data| The dataset for model training| | | ...| Optional other arguments| See the function help page for details| ] .pull-right5[ ### Evaluating a model ```r # Common ways to explore / use a model A_model # Print generic information names(A_model) # Show attributes summary(A_model) # Print summary information predict(A_model, # Predict test data newdata = data_test) plot(A_model) # Visualize the model ``` ] --- ## Regression with `glm()` .pull-left5[ In regression, the criterion is modeled as the weighted sum of predictors times *weights* `\(\beta_{1}\)`, `\(\beta_{2}\)` ### Loan Default: One could model the risk of defaulting on a loan as: `$$Risk = Age \times \beta_{age} + Income \times \beta_{income} + ...$$` Training a model means finding values of `\(\beta_{Age}\)` and `\(\beta_{Income}\)` that 'best' match the training data. <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/regression.png" width="50%" style="display: block; margin: auto;" /> ] .pull-right5[ Create regressions using the `glm()` function (part of base-R) ```r # glm() function for regression glm(formula = y ~., # Formula data = data_train, # Training data family, ...) # Optional arguments # Train glm model loan_glm_model <- glm(formula = risk ~ ., data = data_train) # Predict new data with glm model loan_glm_pred <- predict(loan_glm_model, newdata = data_test) ``` ] --- ## Decision Trees with `FFTrees::FFTrees()` .pull-left5[ In decision trees, the criterion is modeled as a sequence of logical Yes or No questions. ### Loan Default: <!-- --> ] .pull-right5[ Create decision trees using the `FFTrees` package ```r # Load the FFTrees package library(FFTrees) # Train FFTrees model loan_FFTrees_mod <- FFTrees(formula = risk ~ ., data = loan_data) # Predict new data with FFTrees model loan_FFTrees_pred <- predict(loan_FFTrees_mod, newdata = loan_test) ``` ] --- ## Random Forests with `randomForest::randomForest()` .pull-left5[ A Random Forest is a collection of many (hundreds, thousands) of decision trees <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/randomforest_diagram.png" width="90%" /> ] .pull-right5[ Create decision trees using the `randomForest` package ```r # Load the randomforest package library(randomForest) # Calculating a randomForest in R randomForest(formula = y ~., # Formula data = data_train, # Training data ntree, mtry) # Optional # Train randomForest model loan_rf_model <- randomForest(formula = risk ~ ., data = loan_data) # Predict new data with model loan_rf_pred <- predict(loan_rf_model, newdata = loan_test) ``` ] --- # How do I do machine learning in R? .pull-left6[ In the practical, we will go through the basic steps "by hand" so you can see the process: ```r # Create training and test data data_train <- ... data_test <- ... # Train models on training data model_A <- A_fun(formula = y ~ ., data = data_train) # Model A predictions pred_A <- predict(model_A, newdata = data_test) # Calculate Model A error pred_err_A <- mean(abs(pred_A - data_test$y)) # Compare to Models B, C, D... ``` ] .pull-right35[ When you do lots of machine learning, the `caret` and `mlr` packages can automate much of the the machine learning process. <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/mlrcaret.png" width="95%" style="display: block; margin: auto;" /> ] --- ## Machine Learning Live Demo & Practical <p><font size=6><b><a href="https://therbootcamp.github.io/BaselRBootcamp_2018April/_sessions/D2S3_MachineLearning/MachineLearning_practical.html">Link to Machine Learning practical</a> <!-- --- --> <!-- # What is the history of machine learning? --> <!-- - 1805 - 1809: Legendre and Gauss discover least squares. Soon after Galton defines **Regression** in a biological context, followed by Pearson for purely statistical analyses. --> <!-- - 1952: Arthur Samuel creates first computer learning program for learning checkers and coins the term **Machine Learning** in 1959. --> <!-- - 1957: Frank Rosenblatt creates first **Neural Network** to simulate the thought process of the human brain. --> <!-- - 1963: First algorithm for **Support Vector Machines** is developed by Vapnik & Chervonenkis. --> <!-- - 1967: **Nearest neighbor algorithm** is developed for classification --> <!-- - 1984: Breiman & Olshen publish the CART algorithm for **Decision Trees**, followed by Quinlan who publishes the ID3 algorithm followed by C4.5 --> <!-- - 1986: Rina Dechter introduces **Deep Learning**, with many subsequent updates in the 2000s. --> <!-- - 1995: Tin Kam Ho develops first algorithm for **Random Forests** --> <!-- Sources: Wikipedia, Bernard Marr, "A Short History of Machine Learning", Forbes. --> --- ### Old --- # Why do we separate training from prediction? - Data comes from two processes: *Signal* and *Noise* (aka Error). <br> <img src="MachineLearning_files/figure-html/unnamed-chunk-34-1.png" width="80%" style="display: block; margin: auto;" /> --- # Why do we separate training from prediction? - A good model is one that tries to capture the signal and ignore the noise - A bad model is one that captures too much unpredictable noise, <img src="MachineLearning_files/figure-html/unnamed-chunk-35-1.png" width="80%" style="display: block; margin: auto;" />