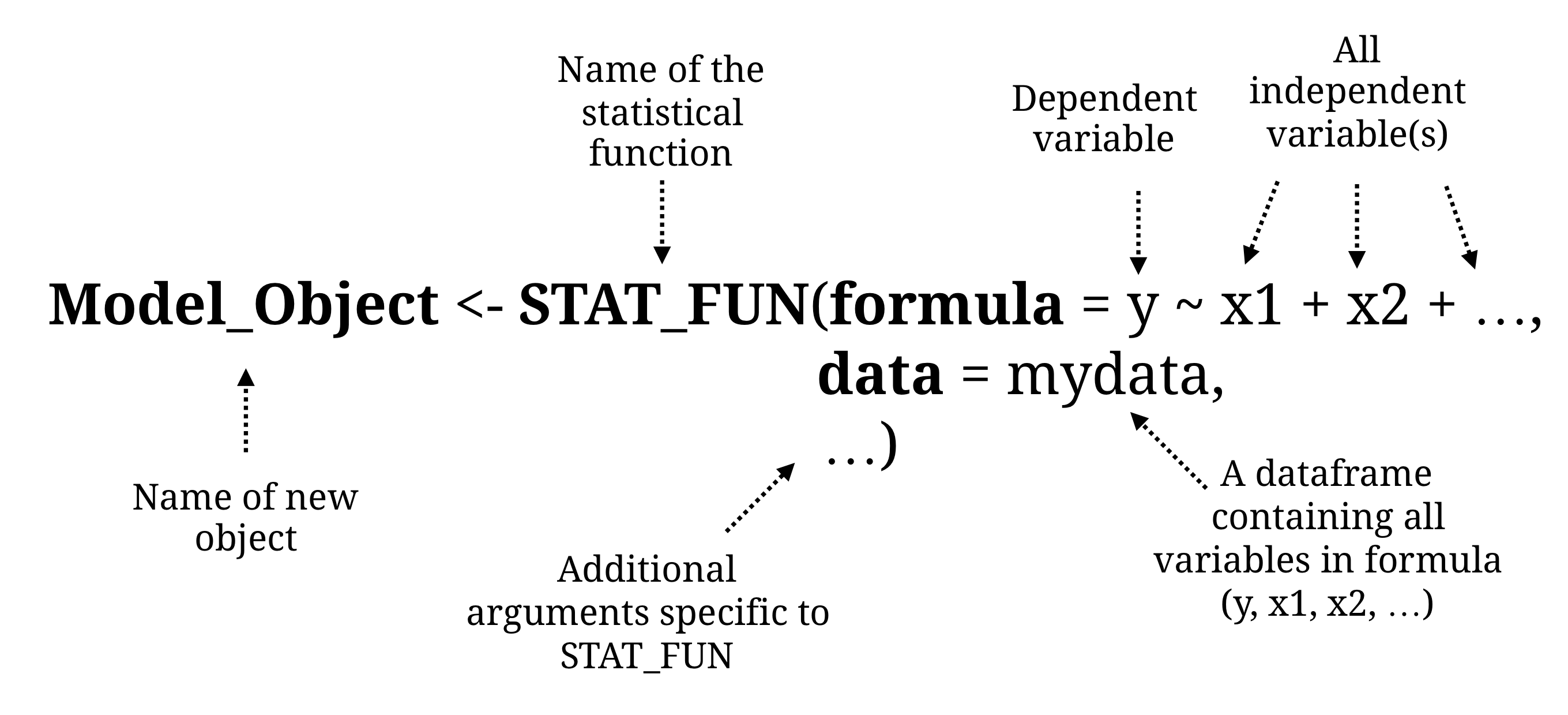

class: center, middle, inverse, title-slide # Statistics ### Basel R Bootcamp<br/><a href='https://therbootcamp.github.io'>www.therbootcamp.com</a><br/><a href='https://twitter.com/therbootcamp'><span class="citation">@therbootcamp</span></a> ### July 2018 --- layout: true <div class="my-footer"><span> <a href="https://therbootcamp.github.io/"><font color="#7E7E7E">BaselRBootcamp, July 2018</font></a>                                        <a href="https://therbootcamp.github.io/"><font color="#7E7E7E">www.therbootcamp.com</font></a> </span></div> --- # Stats? There is a package for that! .pull-left45[ <br> | Package| Models| |------:|:----| | `stats`|Generalized linear model| | `afex`| Anovas| | `lme4`| Mixed effects regression| | `rpart`| Decision Trees| | `BayesFactor`| Bayesian statistics| | `igraph`| Network analysis| | `neuralnet`| Neural networks| | `MatchIt`| Matching and causal inference| | `survival`| Longitudinal survival analysis| | ...| Anything you can ever want!| ] .pull-right5[ <p align="center"> <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/statistical_procedures.png" height="450px" vspace="20"> </p> ] --- .pull-left45[ # In this session... 1 - Basic structure and arguments of most <high>statistical functions</high> - `formula`: Specify your <high>variables</high> - `data`: A <high>data frame</high> containing variables. 2 - Simple <high>`htest`</high> objects - `t.test()`, `cor`relation 3 - (Generalized) <high>linear model</high> - `lm()`, `glm()`, `aov()` 4 - Explore statistical objects - `MODEL$NAME`, `print()`, `summary()`, `names()`, `predict()`, `plot()` 5 - Conduct simulations ] .pull-right5[ ```r # t-test comparing height based on gender t.test(formula = height ~ sex, data = baselers) # Regression model inc_glm <- glm(formula = income ~ ., data = baselers %>% select(-id)) # Summary information summary(inc_glm) # Dissect inc_glm$coefficients # Acess coefficients inc_glm$residuals # Access residuals ### Generate random data x1 <- rnorm(n = 100, mean = 10, sd = 5) x2 <- rnorm(n = 100, mean = 5, sd = 1) noise <- rnorm(n= 100, mean = 0, sd = 10) # Create y as a function of x1, x2, and noise y <- x1 + x2 + noise df <- data.frame(x1, x2, y) # Regress y on x1 and x2 lm(formula = y ~ ., data = df) ``` ] --- # Basic structure of statistical functions .pull-left4[ Statistical functions always require a <high>data frame</high> called `data`, e.g.,... |sex | age| height| weight| income| |:------|---:|------:|------:|------:| |male | 44| 174.3| 113.4| 6300| |male | 65| 180.3| 75.2| 10900| |female | 31| 168.3| 55.5| 5100| <br> They also require a <high>formula</high> that specifies a <high>dependent</high> variable (y) as a function of one or more <high>independent</high> variables (x1, x2, ...) in the form: <p align='center'><font size = 6>formula = y ~ x1 + x2 +...</font></p> ] .pull-right55[ How to create a statistical object: ```r # Example: Create regression object (my_glm) my_glm <- glm(formula = income ~ age + height, data = baselers) ``` <!-- --> ] --- .pull-left35[ # Look for optional arguments Statistical functions usually have many optional arguments. Each of these have <high>default</high> values. To customise a test, <high>look at the help menu</high> and specify arguments explicitly. ] .pull-right6[ <br><br> <u>Default vs. customised `glm()` (Generalized linear model)</u> ```r # Default glm(formula = income ~ age + education, data = baselers) # Customised glm(formula = eyecor ~ age + education, data = baselers, family = "binomial") # Logistic regression ``` <u>Default vs. customised `t-test`</u> ```r # Default t.test(formula = age ~ sex, data = baselers) # Customised t.test(formula = age ~ sex, data = baselers, alternative = "less", # One sided test var.equal = TRUE) # Assume equal variance ``` ] --- .pull-left35[ # Look for optional arguments ```r ?glm ``` <p align="center"> <img src="https://raw.githubusercontent.com/therbootcamp/Erfurt_2018June/master/_sessions/_image/glm_help.jpg"> </p> ] .pull-right6[ <br><br> <u>Default vs. customised `glm()` (Generalized linear model)</u> ```r # Default glm(formula = income ~ age + education, data = baselers) # Customised glm(formula = eyecor ~ age + education, data = baselers, family = "binomial") # Logistic regression ``` <u>Default vs. customised `t-test`</u> ```r # Default t.test(formula = age ~ sex, data = baselers) # Customised t.test(formula = age ~ sex, data = baselers, alternative = "less", # One sided test var.equal = TRUE) # Assume equal variance ``` ] --- # Simple hypothesis tests .pull-left45[ All of the basic <high>one and two sample hypothesis tests</high> are included in the `stats` package. These tests take either a <high>formula</high> for the argument `formula`, or <high>individual vectors</high> for the arguments `x`, and `y` <br> .pull-left6[ | Hypothesis Test| R Function| |------------:|------------:| | t-test| `t.test()`| | Correlation Test| `cor.test()`| | Chi-Square Test| `chisq.test()`| ] ] .pull-right5[ ### t-test with `t.test()` ```r # 2-sample t-test t.test(formula = income ~ sex, data = baselers) ``` ``` ## ## Welch Two Sample t-test ## ## data: income by sex ## t = 4, df = 8500, p-value = 6e-05 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## 120.6 352.2 ## sample estimates: ## mean in group female mean in group male ## 7650 7414 ``` ] --- # Simple hypothesis tests .pull-left45[ All of the basic <high>one and two sample hypothesis tests</high> are included in the `stats` package. These tests take either a <high>formula</high> for the argument `formula`, or <high>individual vectors</high> for the arguments `x`, and `y` <br> .pull-left6[ | Hypothesis Test| R Function| |------------:|------------:| | t-test| `t.test()`| | Correlation Test| `cor.test()`| | Chi-Square Test| `chisq.test()`| ] ] .pull-right5[ ### Correlation test with `cor.test()` ```r # Correlation test cor.test(x = baselers$age, y = baselers$income) # Version using formula (same result as above) cor.test(formula = ~ age + income, data = baselers) ``` ``` ## ## Pearson's product-moment correlation ## ## data: baselers$age and baselers$income ## t = 180, df = 8500, p-value <2e-16 ## alternative hypothesis: true correlation is not equal to 0 ## 95 percent confidence interval: ## 0.8882 0.8968 ## sample estimates: ## cor ## 0.8926 ``` ] --- # Regression with `glm()`, `lm()` .pull-left35[ How to <high>create a regression model</high> predicting, e.g., how much money people spend on `food` as a function of `income`? <br> Part of the `baselers` dataframe: .pull-left6[ | food| income| happiness| |----:|------:|---------:| | 610| 6300| 5| | 1550| 10900| 7| | 720| 5100| 7| | 680| 4200| 7| | 260| 4000| 5| ] <!-- `$$\Large food = \beta_{0} + \beta_{1} \times Inc + \beta_{1} \times Hap+ \epsilon$$` --> ] .pull-right6[ ### Generalized regression with `glm()` ```r # food (y) on income (x1) and happiness (x2) food_glm <- glm(formula = food ~ income + happiness, data = baselers) # Print food_glm food_glm ``` ``` ## ## Call: glm(formula = food ~ income + happiness, data = baselers) ## ## Coefficients: ## (Intercept) income happiness ## -302.089 0.101 52.205 ## ## Degrees of Freedom: 8509 Total (i.e. Null); 8507 Residual ## (1490 observations deleted due to missingness) ## Null Deviance: 1.27e+09 ## Residual Deviance: 6.06e+08 AIC: 119000 ``` ] --- # Customising formulas Include additional independent variables to formulas by "adding" them with <high>`+`</high> ```r # Include multiple terms with + my_glm <- glm(formula = income ~ food + alcohol + happiness + hiking, data = baselers) ``` To <high>include all variables</high> in a dataframe, use the catch-all notation <high>`formula = y ~ .`</high> ```r # Use y ~ . to include ALL variables my_glm <- glm(formula = income ~ ., data = baselers) ``` To include <high>interaction terms</high> use `x1 : x2` or `x1 * x2` (also includes main effects) instead of `x1 + x2` ```r # Include an interaction term between food and alcohol my_glm <- glm(formula = income ~ food * alcohol, data = baselers) ``` --- # Exploring statistical objects .pull-left35[ Explore statistical objects using <high>generic</high> functions such as `print()`, `summary()`, `predict()` and `plot()`. <high>Generic</high> functions different things depending on the <high>class label</high> of the object. ```r # Create statistical object obj <- STAT_FUN(formula = ..., data = ...) names(obj) # Elements print(obj) # Print summary(obj) # Summary plot(obj) # Plotting predict(obj, ..) # Predict ``` ] .pull-right6[ ```r # Create a glm object my_glm <- glm(formula = income ~ happiness + age, data = baselers) # print the my_glm object print(my_glm) ``` ``` ## ## Call: glm(formula = income ~ happiness + age, data = baselers) ## ## Coefficients: ## (Intercept) happiness age ## 1575 -100 149 ## ## Degrees of Freedom: 8509 Total (i.e. Null); 8507 Residual ## (1490 observations deleted due to missingness) ## Null Deviance: 6.33e+10 ## Residual Deviance: 1.28e+10 AIC: 145000 ``` ] --- # Exploring statistical objects .pull-left35[ Explore statistical objects using <high>generic</high> functions such as `print()`, `summary()`, `predict()` and `plot()`. <high>Generic</high> functions different things depending on the <high>class label</high> of the object. ```r # Create statistical object obj <- STAT_FUN(formula = ..., data = ...) names(obj) # Elements print(obj) # Print summary(obj) # Summary plot(obj) # Plotting predict(obj, ..) # Predict ``` ] .pull-right6[ ```r # Create a glm object my_glm <- glm(formula = income ~ happiness + age, data = baselers) # Show summary of the my_glm object summary(my_glm) ``` ``` ## ## Call: ## glm(formula = income ~ happiness + age, data = baselers) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -4045 -835 3 814 4899 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 1575.497 94.363 16.70 < 2e-16 *** ## happiness -100.431 12.520 -8.02 1.2e-15 *** ## age 149.312 0.815 183.31 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 1501842) ## ## Null deviance: 6.3307e+10 on 8509 degrees of freedom ## Residual deviance: 1.2776e+10 on 8507 degrees of freedom ## (1490 observations deleted due to missingness) ## AIC: 145186 ## ## Number of Fisher Scoring iterations: 2 ``` ] --- # Exploring statistical objects .pull-left35[ Explore statistical objects using <high>generic</high> functions such as `print()`, `summary()`, `predict()` and `plot()`. <high>Generic</high> functions different things depending on the <high>class label</high> of the object. ```r # Create statistical object obj <- STAT_FUN(formula = ..., data = ...) names(obj) # Elements print(obj) # Print summary(obj) # Summary plot(obj) # Plotting predict(obj, ..) # Predict ``` ] .pull-right6[ Many <high>statistical objects are lists</high>. Show elements with `names()`, access them with <high>`$`</high>. ```r # What are the named elements names(my_glm) ``` ``` ## [1] "coefficients" "residuals" "fitted.values" "effects" "R" ## [6] "rank" "qr" "family" "linear.predictors" "deviance" ``` <p align="left"> <img src="https://raw.githubusercontent.com/therbootcamp/Erfurt_2018June/master/_sessions/_image/list_tab.png" height="180px" vspace="10px"> </p> ] --- # Exploring statistical objects .pull-left35[ Explore statistical objects using <high>generic</high> functions such as `print()`, `summary()`, `predict()` and `plot()`. <high>Generic</high> functions different things depending on the <high>class label</high> of the object. ```r # Create statistical object obj <- STAT_FUN(formula = ..., data = ...) names(obj) # Elements print(obj) # Print summary(obj) # Summary plot(obj) # Plotting predict(obj, ..) # Predict ``` ] .pull-right6[ ```r # Look at coefficients my_glm$coefficients ``` ``` ## (Intercept) happiness age ## 1575.5 -100.4 149.3 ``` ```r # First 5 fitted values my_glm$fitted.values ``` ``` ## 1 2 3 4 5 6 7 8 ## 7643 10578 5501 4904 4657 10279 11373 6994 ``` ```r # First 5 residuals my_glm$residuals ``` ``` ## 1 2 3 4 5 6 ## -1343.1 322.2 -401.2 -703.9 -656.8 1120.8 ``` ] --- # `predict()` .pull-left4[ `predict(model, newdata)` allows you to use your `model` to <high>predict outcomes</high> for `newdata`. ```r last_year ``` | id| age| fitness| tattoos| income| |--:|---:|-------:|-------:|------:| | 1| 44| 7| 6| 6300| | 2| 65| 8| 5| 10900| ```r this_year ``` | id| age| fitness| tattoos|income | |---:|---:|-------:|-------:|:------| | 101| 21| 3| 4|NA | | 102| 23| 6| 8|NA | ] .pull-right55[ <high>Fit `model`</high> based on `leastyear` ```r # Create regression model predicting income model <- lm(formula = income ~ age + tattoos, data = lastyear) model$coefficients ``` ``` ## (Intercept) age tattoos ## 1418.3 145.7 -175.5 ``` Now use `model` to <high>`predict`</high> values for `thisyear` ```r # Predict the income of people in thisyear predict(object = model, newdata = thisyear) ``` ``` ## 1 2 ## 3776 3366 ``` ] --- .pull-left4[ # `tidy()` The `tidy()` function from the `broom` package <high>converts</high> the most important results of many statistical object like "glm" to a <high>data frame</high>. ```r # install and load broom install.packages('broom') library(broom) ``` <p align="center"> <img src="https://raw.githubusercontent.com/therbootcamp/Erfurt_2018June/master/_sessions/_image/broom_hex.png" height="200px" vspace="10"> </p> ] .pull-right55[ <br><br> ```r # Original printout my_glm ``` ``` ## ## Call: glm(formula = income ~ happiness + age, data = baselers) ## ## Coefficients: ## (Intercept) happiness age ## 1575 -100 149 ## ## Degrees of Freedom: 8509 Total (i.e. Null); 8507 Residual ## (1490 observations deleted due to missingness) ## Null Deviance: 6.33e+10 ## Residual Deviance: 1.28e+10 AIC: 145000 ``` ```r # Tidy printout tidy(my_glm) ``` ``` ## term estimate std.error statistic p.value ## 1 (Intercept) 1575.5 94.3629 16.696 1.328e-61 ## 2 happiness -100.4 12.5197 -8.022 1.180e-15 ## 3 age 149.3 0.8145 183.315 0.000e+00 ``` ] --- # Sampling Functions .pull-left4[ R gives you a host of functions for sampling data from common <high>statistical distributions</high> (see `?distributions`). Use these to easily <high>simulate data</high>: .pull-left55[ | Distribution| R Function| |------:|------------:| | Normal| `rnorm()`| | Uniform|`runif()`| | Beta|`rbeta()`| | Binomial|`rbinom()`| ] ] .pull-right5[ <p align="left"> <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/distributions_help.png" height = "450px"> </p> ] --- .pull-left45[ # sample() Use `sample()` to <high>draw a random sample</high> from a vector. ```r # Simulate 8 flips of a fair coin coin_flips <- sample(x = c("H", "T"), size = 8, prob = c(.5, .5), replace = TRUE) coin_flips ``` ``` ## [1] "T" "H" "T" "H" "H" "H" "T" "H" ``` ```r # Table of counts table(coin_flips) ``` ``` ## coin_flips ## H T ## 5 3 ``` ] .pull-right5[ <br> <p align="left"> <font size=5><high>The Birthday problem</high></font size=5>         <img src="https://raw.githubusercontent.com/therbootcamp/Erfurt_2018June/master/_sessions/_image/balloons.png" height = "100px"> </p> ```r # Create an empty room birthdays <- c() # While none of the birthdays are the same, # keep adding new ones while(all(!duplicated(birthdays))) { # Get new birthday new_day <- sample(x = 1:365, size = 1) # Add new_day to birthdays birthdays <- c(birthdays, new_day) } # Done! How many are in the room?? length(birthdays) ``` ``` ## [1] 51 ``` ] --- # `rnorm`, `runif()`, `...` .pull-left5[ Use the `rnorm()`, `runif()`, `...` functions to draw random <high>samples from probability distributions</high>. ```r # Random sample from Normal distribution mysamp <- rnorm(n = 100, # Number of samples mean = 10, # Mean of pop sd = 5) # SD of pop ... mysamp[1:5] # First 5 values ``` ``` ## [1] 4.265 6.066 10.210 9.924 18.168 ``` ```r mean(mysamp) # Mean ``` ``` ## [1] 10.24 ``` ```r sd(mysamp) # Standard deviation ``` ``` ## [1] 5.065 ``` ] .pull-right45[ <img src="Statistics_files/figure-html/unnamed-chunk-47-1.png" width="100%" /> ] --- .pull-left4[ # Other great statistics packages <br> |package|Description| |:----|:-----| |`afex`|Factorial experiments| |`lme4`|Mixed effects models| |`rstanarm`|Bayesian mixed effects models| |`BayesFactor`|Bayesian Models| |`forecast`|Time series| |`lavaan`|Latent variable and structural equation modelling| ] .pull-right55[ <br><br> <p align="center"> <img src="https://raw.githubusercontent.com/therbootcamp/Erfurt_2018June/master/_sessions/_image/BayesFactor_ss.png" height= "120px"> </p> <p align="center"> <img src="https://raw.githubusercontent.com/therbootcamp/Erfurt_2018June/master/_sessions/_image/lavaan_ss.jpg" height= "320px" vspace="35px"> </p> ] --- # Summary .pull-left45[ 1 - There are <high>packages for every statistical procedure</high> you can imagine in R. 2 - Most have <high>formula</high> and <high>data</high> arguments (among many others). 3 - Use <high>help files</high> to understand the arguments of functions! 4 - Once you've created a statistical object, use <high>generic functions</high> to explore it: `print()`, `names()`, `summary()`, etc. 5 - Use <high>random sampling</high> functions to run simulations. ] .pull-right5[ ```r ?t.test ``` <p align="left"> <img src="https://raw.githubusercontent.com/therbootcamp/therbootcamp.github.io/master/_sessions/_image/ttesthelp_ss.png" height= "380px" > </p> ] --- # Practical <p> <font size=6> <a href="https://therbootcamp.github.io/BaselRBootcamp_2018July/_sessions/Statistics/Statistics_practical.html"><b>Link to practical<b></a> </font> </p>