Fitting

|

Machine Learning with R Basel R Bootcamp |

|

adapted from xkcd.com

Overview

In this practical, you’ll practice the basics of fitting and exploring regression models in R.

By the end of this practical you will know how to:

- Fit a regression model to training data.

- Explore your fit object with generic functions.

- Evaluate the model’s fitting performance using accuracy measures such as MSE and MAE.

- Explore the effects of adding additional features.

Tasks

A - Setup

- Open your

BaselRBootcampR project. It should already have the folders1_Dataand2_Code. Make sure that the data file(s) listed in theDatasetssection are in your1_Datafolder

# Done!- Open a new R script. At the top of the script, using comments, write your name and the date. Save it as a new file called

Fitting_practical.Rin the2_Codefolder.

# Done!- Using

library()load the set of packages for this practical listed in the packages section above.

# Load packages necessary for this script

library(tidyverse)

library(caret)- For this practical, we’ll use a dataset of 388 U.S. Colleges. The data is stored in

college_train.csv. Using the following template, load the dataset into R ascollege_train:

# Load in college_train.csv data as college_train

college_train <- read_csv(file = "1_Data/college_train.csv")- Take a look at the first few rows of the dataset by printing it to the console.

college_train# A tibble: 500 x 18

Private Apps Accept Enroll Top10perc Top25perc F.Undergrad P.Undergrad

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Yes 1202 1054 326 18 44 1410 299

2 Yes 1415 714 338 18 52 1345 44

3 Yes 4778 2767 678 50 89 2587 120

4 Yes 1220 974 481 28 67 1964 623

5 Yes 1981 1541 514 18 36 1927 1084

6 Yes 1217 1088 496 36 69 1773 884

7 No 8579 5561 3681 25 50 17880 1673

8 No 833 669 279 3 13 1224 345

9 No 10706 7219 2397 12 37 14826 1979

10 Yes 938 864 511 29 62 1715 103

# … with 490 more rows, and 10 more variables: Outstate <dbl>,

# Room.Board <dbl>, Books <dbl>, Personal <dbl>, PhD <dbl>,

# Terminal <dbl>, S.F.Ratio <dbl>, perc.alumni <dbl>, Expend <dbl>,

# Grad.Rate <dbl>- Print the numbers of rows and columns using the

dim()function.

# Print number of rows and columns of college_train

dim(XXX)# Print number of rows and columns of college_train

dim(college_train)[1] 500 18- Look at the names of the dataframe with the

names()function

names(XXX)names(college_train) [1] "Private" "Apps" "Accept" "Enroll" "Top10perc"

[6] "Top25perc" "F.Undergrad" "P.Undergrad" "Outstate" "Room.Board"

[11] "Books" "Personal" "PhD" "Terminal" "S.F.Ratio"

[16] "perc.alumni" "Expend" "Grad.Rate" - Open the dataset in a new window using

View(). How does it look?

View(XXX)- Before starting to model the data, we need to do a little bit of data cleaning. Specifically, we need to convert all character columns to factors: Do this by running the following code:

# Convert character to factor

college_train <- college_train %>%

mutate_if(is.character, factor)B - Determine sampling procedure

In caret, we define the computational nuances of training using the trainControl() function. Because we’re learning the basics of fitting, we’ll set method = "none" for now. (Note that you would almost never do this for a real prediction task, you’ll see why later!)

# Set training resampling method to "none" to keep everything super simple

# for demonstration purposes. Note that you would almost never

# do this for a real prediction task!

ctrl_none <- trainControl(method = "none") Regression

C - Fit a regression model

- Using the code below, fit a regression model predicting graduation rate (

Grad.Rate) as a function of one featurePhD(percent of faculty with PhDs). Save the result as an objectGrad.Rate_glm. Specifically,…

- set the

formargument toGrad.Rate ~ PhD. - set the

dataargument to your training datacollege_train. - set the

methodargument to"glm"for regression. - set the

trControlargument toctrl_none, the object you created previously.

# Grad.Rate_glm: Regression Model

# Criterion: Grad.Rate

# Features: PhD

Grad.Rate_glm <- train(form = XX ~ XX,

data = XX,

method = "XX",

trControl = XX)# Grad.Rate_glm: Regression Model

# Criterion: Grad.Rate

# Features: PhD

Grad.Rate_glm <- train(form = Grad.Rate ~ PhD,

data = college_train,

method = "glm",

trControl = ctrl_none)- Explore the fitted model using the

summary()function, by setting the function’s first argument toGrad.Rate_glm.

# Show summary information from the regression model

summary(XXX)# Show summary information from the regression model

summary(Grad.Rate_glm)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-43.83 -10.44 0.49 10.93 41.47

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 41.372 3.382 12.23 < 2e-16 ***

PhD 0.330 0.045 7.33 9.1e-13 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for gaussian family taken to be 257)

Null deviance: 141641 on 499 degrees of freedom

Residual deviance: 127832 on 498 degrees of freedom

AIC: 4197

Number of Fisher Scoring iterations: 2- Look at the results, how do you interpret the regression coefficients? What is the general relationship between PhD and graduation rates? Does this make sense?

# For every increase of one in PhD (the percent of faculty with a PhD), the expected graduation rate increases by 0.33- Now it’s time to save the model’s fitted values! Do this by running the following code to save the fitted values as

glm_fit. Tip: Set the first argument toGrad.Rate_glm.

# Get fitted values from the Grad.Rate_glm model and save as glm_fit

glm_fit <- predict(XXX)# Get fitted values from the model and save as glm_fit

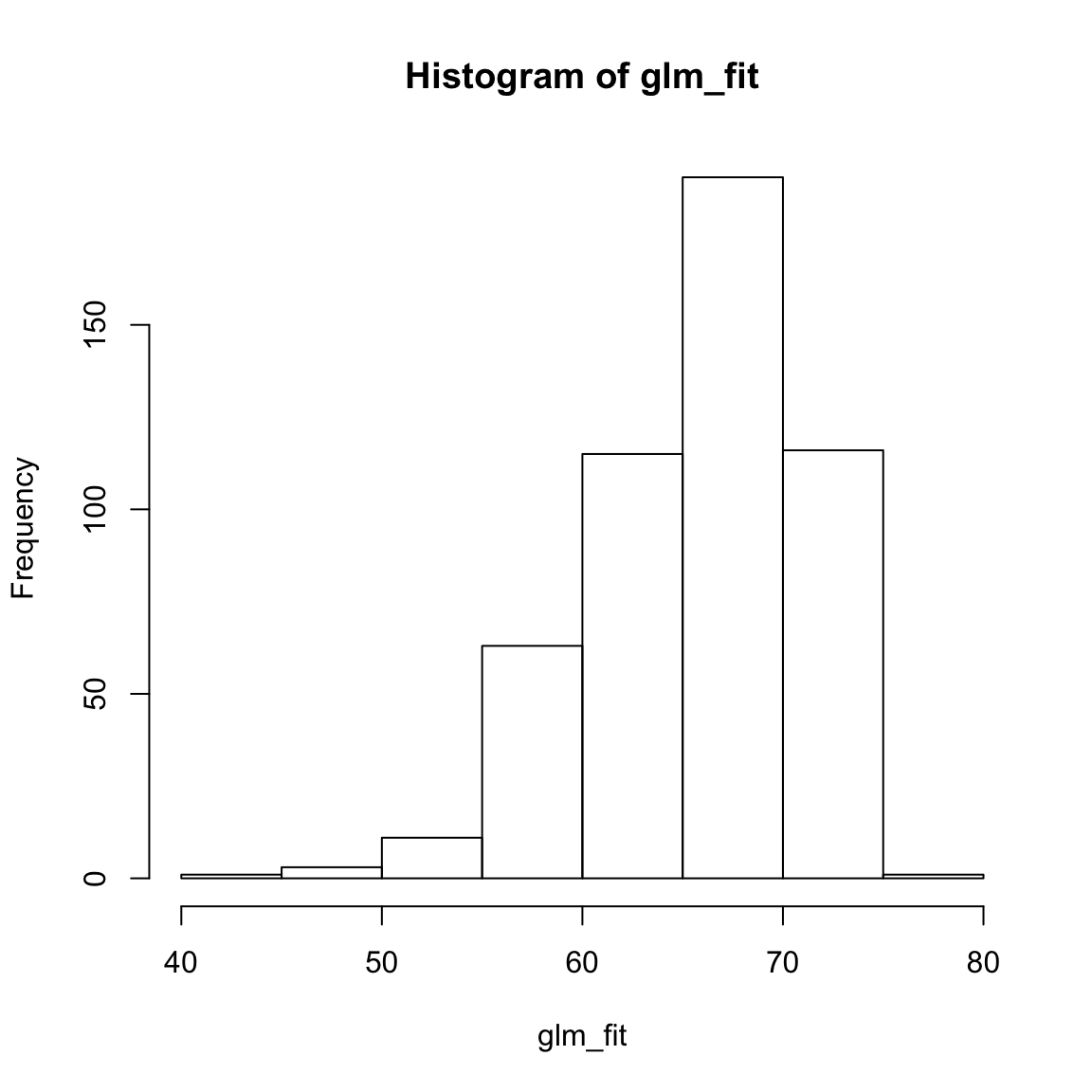

glm_fit <- predict(Grad.Rate_glm)- Plot the distribution of your

glm_fitobject using the code below - what are these values? Do they look reasonable? That is, are they in the range of what you expect the criterion to be?

# Plot glm_fit

hist(glm_fit)

# Yes, values appear to be within 40 and 80, which is what we expect from the truth population.D - Evaluate accuracy

- Now it’s time to compare your model fits to the true values. We’ll start by defining the vector

criterionas the actual graduation rates.

# Define criterion as Grad.Rate

criterion <- college_train$Grad.Rate- Let’s quantify our model’s fitting results. To do this, we’ll use the

postResample()function, with the fitted values as the prediction, and the criterion as the observed values.

Specifically,

- set the

predargument toglm_fit(your fitted values). - set the

obsargument tocriterion(a vector of the criterion values).

# Regression Fitting Accuracy

postResample(pred = XXX, # Fitted values

obs = XXX) # criterion values# Regression Fitting Accuracy

postResample(pred = glm_fit, # Fitted values

obs = criterion) # criterion values RMSE Rsquared MAE

15.9895 0.0975 12.8633 - You’ll see three values here. The easiest to understand is MAE which stands for “Mean Absolute Error” – in other words, “on average how far are the predictions from the true values?” A value of 0 means perfect prediction, so small values are good! How do you interpret these results?

# On average, the model fits are 12.8633 away from the true values.

# Whether this is 'good' or not depends on you :)- Now we’re ready to do some plotting. But first, we need to re-organise the data a bit. We’ll create two dataframes:

accuracy- Raw absolute errorsaccuracy_agg- Aggregate (i.e.; mean) absolute errors

# accuracy - a dataframe of raw absolute errors

accuracy <- tibble(criterion = criterion,

Regression = glm_fit) %>%

gather(model, prediction, -criterion) %>%

# Add error measures

mutate(ae = abs(prediction - criterion))

# accuracy_agg - Dataframe of aggregate errors

accuracy_agg <- accuracy %>%

group_by(model) %>%

summarise(mae = mean(ae)) # Calculate MAE (mean absolute error)- Print the

accuracyandaccuracy_aggobjects to see how they look.

accuracy

accuracy_agghead(accuracy) # Just printing the first few rows# A tibble: 6 x 4

criterion model prediction ae

<dbl> <chr> <dbl> <dbl>

1 65 Regression 67.1 2.12

2 69 Regression 58.5 10.5

3 83 Regression 66.8 16.2

4 49 Regression 61.5 12.5

5 80 Regression 65.5 14.5

6 67 Regression 52.9 14.1 head(accuracy_agg)# A tibble: 1 x 2

model mae

<chr> <dbl>

1 Regression 12.9- Using the code below, create a scatterplot showing the relationship between the true criterion values and the model fits.

# Plot A) Scatterplot of criterion versus predictions

ggplot(data = accuracy,

aes(x = criterion, y = prediction)) +

geom_point(alpha = .2) +

geom_abline(slope = 1, intercept = 0) +

labs(title = "Regression: One Feature",

subtitle = "Line indicates perfect performance",

x = "True Graduation Rates",

y = "Fitted Graduation Rates") +

xlim(0, 120) +

ylim(0, 120)- Look at the plot, how do you interpret this? Do you think the model did well or not in fitting the graduation rates?

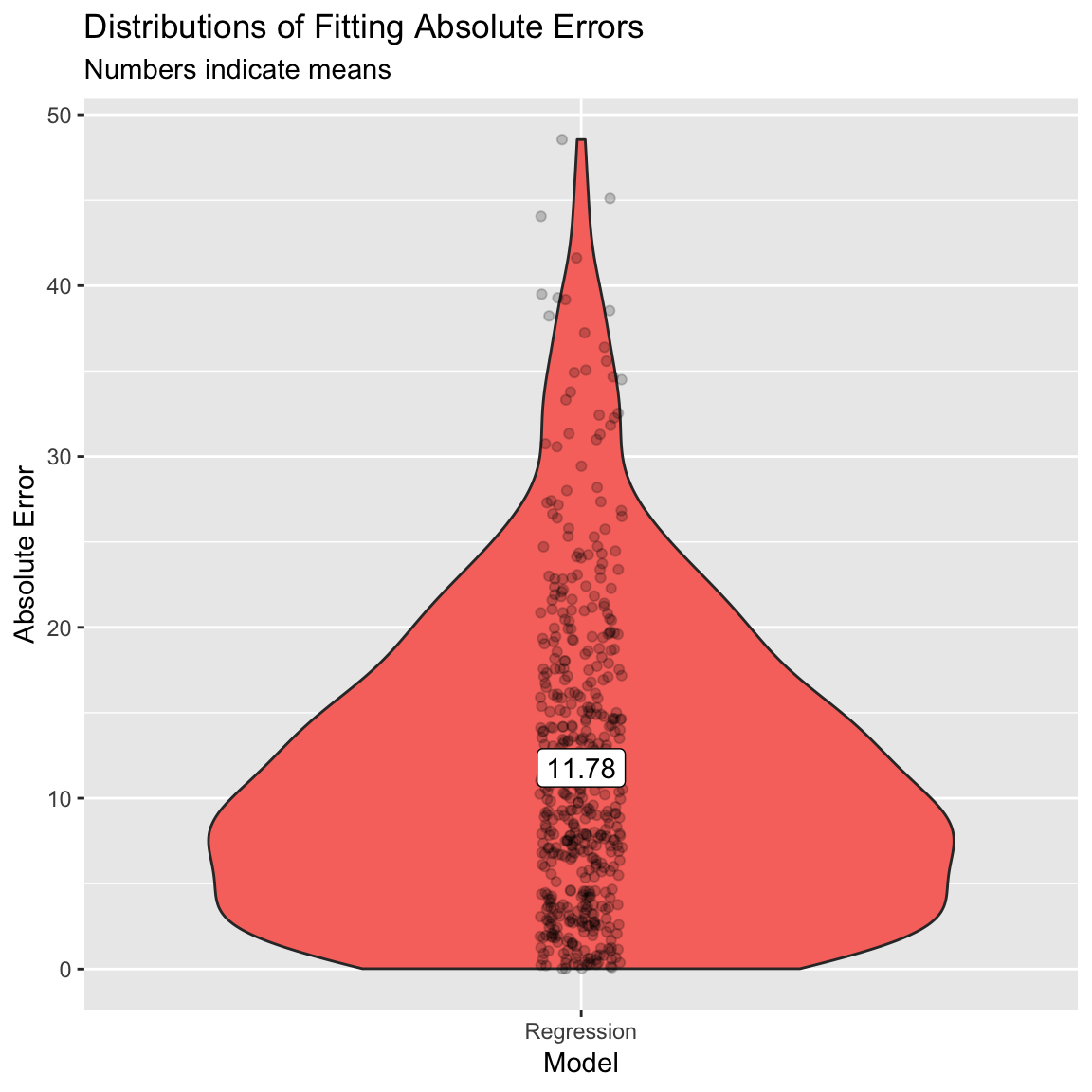

# No the model is not great, values do not fall very closely to the black diagonal line.- Let’s create a new violin plot showing the distribution of absolute errors of the model.

# Plot B) Violin plot of absolute errors

ggplot(data = accuracy,

aes(x = model, y = ae, fill = model)) +

geom_violin() +

geom_jitter(width = .05, alpha = .2) +

labs(title = "Distributions of Fitting Absolute Errors",

subtitle = "Numbers indicate means",

x = "Model",

y = "Absolute Error") +

guides(fill = FALSE) +

annotate(geom = "label",

x = accuracy_agg$model,

y = accuracy_agg$mae,

label = round(accuracy_agg$mae, 2))- What does the plot show you about the model fits? On average, how far away were the model fits from the true values?

# On average, the model fits are 12.86 away from the true criterion values.

# However, there is also quite a bit of variabilityE - Add more features

So far we have only used one feature (PhD), to predict Grad.Rate. Let’s try again, but now we’ll use a total of four features:

PhD- the percent of faculty with a PhD.Room.Board- room and board costs.Terminal- percent of faculty with a terminal degree.S.F.Ratio- student to faculty ratio.

- Using the same steps as above, create a regression model

Grad.Rate_glmwhich predictsGrad.Rateusing all 4 features (you can also call it something else if you want to save your original model!). Specifically,…

- set the

formargument toGrad.Rate ~ PhD + Room.Board + Terminal + S.F.Ratio. - set the

dataargument to your training datacollege_train. - set the

methodargument to"glm"for regression. - set the

trControlargument toctrl_none.

# Grad.Rate_glm: Regression Model

# Criterion: Grad.Rate

# Features: PhD, Room.Board, Terminal, S.F.Ratio

Grad.Rate_glm <- train(form = XXX ~ XXX + XXX + XXX + XXX,

data = XXX,

method = "XXX",

trControl = XXX)# Grad.Rate_glm: Regression Model

# Criterion: Grad.Rate

# Features: PhD, Room.Board, Terminal, S.F.Ratio

Grad.Rate_glm <- train(form = Grad.Rate ~ PhD + Room.Board + Terminal + S.F.Ratio,

data = college_train,

method = "glm",

trControl = ctrl_none)- Explore your model using

summary(). Which features seem to be important? Tip: set the first argument toGrad.Rate_glm.

summary(XXX)summary(Grad.Rate_glm)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-45.10 -9.63 0.40 10.07 48.55

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 38.635042 5.288467 7.31 1.1e-12 ***

PhD 0.217725 0.080744 2.70 0.0072 **

Room.Board 0.004674 0.000676 6.91 1.5e-11 ***

Terminal -0.021957 0.088196 -0.25 0.8035

S.F.Ratio -0.524664 0.176980 -2.96 0.0032 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for gaussian family taken to be 224)

Null deviance: 141641 on 499 degrees of freedom

Residual deviance: 110813 on 495 degrees of freedom

AIC: 4131

Number of Fisher Scoring iterations: 2- Save the model’s fitted values as a new object

glm_fit. I.e., set the first argument ofpredict()to yourGrad.Rate_glmmodel.

# Save new model fits

glm_fit <- predict(XXX)# Save new model fits

glm_fit <- predict(Grad.Rate_glm)- By comparing the model fits to the true criterion values using

postResample()calculate the Mean Absolute Error (MAE) of your new model that uses 4 features. How does this compare to your previous model that only used 1 feature? Specifically,…

- set the

predargument toglm_fit, your model fits. - set the

obsargument tocriterion, a vector of the true criterion values.

# New model fitting accuracy

postResample(pred = XXX, # Fitted values

obs = XXX) # criterion values# New model fitting accuracy

postResample(pred = glm_fit, # Fitted values

obs = criterion) # criterion values RMSE Rsquared MAE

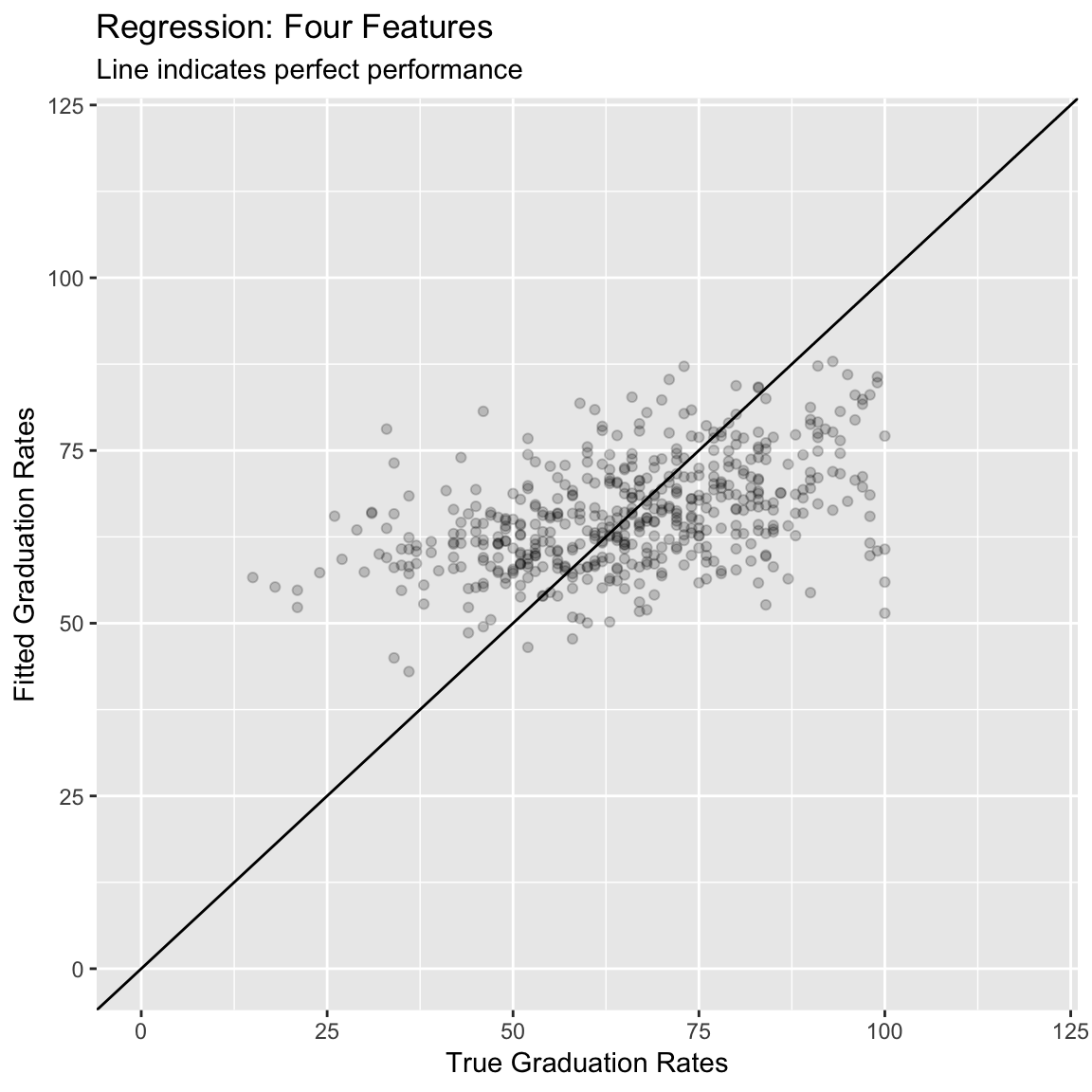

14.887 0.218 11.779 # The new MAE value is 11.779, it's better (smaller) than the previous model, but still not great (in my opinion)- (Optional). Create a scatter plot showing the relationship between your new model fits and the true values. How does this plot compare to your previous one?

# accuracy: a dataframe of raw absolute errors

accuracy <- tibble(criterion = criterion,

Regression = glm_fit) %>%

gather(model, prediction, -criterion) %>%

# Add error measures

mutate(ae = abs(prediction - criterion))

# accuracy_agg: Dataframe of aggregate errors

accuracy_agg <- accuracy %>%

group_by(model) %>%

summarise(mae = mean(ae)) # Calculate MAE (mean absolute error)

# Plot A) Scatterplot of criterion versus predictions

ggplot(data = accuracy,

aes(x = criterion, y = prediction)) +

geom_point(alpha = .2) +

geom_abline(slope = 1, intercept = 0) +

labs(title = "Regression: Four Features",

subtitle = "Line indicates perfect performance",

x = "True Graduation Rates",

y = "Fitted Graduation Rates") +

xlim(0, 120) +

ylim(0, 120)

- (Optional). Create a violin plot showing the distribution of absolute errors. How does this compare to your previous one?

# Plot B) Violin plot of absolute errors

ggplot(data = accuracy,

aes(x = model, y = ae, fill = model)) +

geom_violin() +

geom_jitter(width = .05, alpha = .2) +

labs(title = "Distributions of Fitting Absolute Errors",

subtitle = "Numbers indicate means",

x = "Model",

y = "Absolute Error") +

guides(fill = FALSE) +

annotate(geom = "label",

x = accuracy_agg$model,

y = accuracy_agg$mae,

label = round(accuracy_agg$mae, 2))

F - Use all features

Alright, now it’s time to use all features available!

- Using the same steps as above, create a regression model

glm_fitwhich predictsGrad.Rateusing all features in the dataset. Specifically,…

- set the

formargument toGrad.Rate ~ .. - set the

dataargument to the training datacollege_train. - set the

methodargument to"glm"for regression. - set the

trControlargument toctrl_none.

Grad.Rate_glm <- train(form = XXX ~ .,

data = XXX,

method = "glm",

trControl = XXX)Grad.Rate_glm <- train(form = Grad.Rate ~ .,

data = college_train,

method = "glm",

trControl = ctrl_none)- Explore your model using

summary(), which features seem to be important?

summary(XXX)summary(Grad.Rate_glm)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-38.10 -7.24 -0.58 7.51 47.10

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 31.010972 5.911481 5.25 2.3e-07 ***

PrivateYes 1.701840 2.114677 0.80 0.42135

Apps 0.001926 0.000572 3.37 0.00082 ***

Accept -0.001754 0.001046 -1.68 0.09417 .

Enroll 0.005550 0.002872 1.93 0.05387 .

Top10perc -0.049727 0.086281 -0.58 0.56466

Top25perc 0.206252 0.066972 3.08 0.00219 **

F.Undergrad -0.001069 0.000461 -2.32 0.02068 *

P.Undergrad -0.001294 0.000444 -2.92 0.00369 **

Outstate 0.001782 0.000297 6.01 3.7e-09 ***

Room.Board 0.000871 0.000721 1.21 0.22790

Books -0.000932 0.004089 -0.23 0.81988

Personal -0.001457 0.000998 -1.46 0.14494

PhD 0.104743 0.071027 1.47 0.14095

Terminal -0.101789 0.076321 -1.33 0.18293

S.F.Ratio 0.275943 0.191423 1.44 0.15008

perc.alumni 0.219944 0.061576 3.57 0.00039 ***

Expend -0.000683 0.000202 -3.39 0.00077 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for gaussian family taken to be 155)

Null deviance: 141641 on 499 degrees of freedom

Residual deviance: 74595 on 482 degrees of freedom

AIC: 3960

Number of Fisher Scoring iterations: 2- Save the model’s fitted values as a new object

glm_fit.

# Save new model fits

glm_fit <- predict(XXX)# Save new model fits

glm_fit <- predict(Grad.Rate_glm)- What is the Mean Absolute Error (MAE) of your new model that uses 17 features? How does this compare to your previous model that only used 1 feature?

# New model fitting accuracy

postResample(pred = glm_fit, # Fitted values

obs = criterion) # criterion values RMSE Rsquared MAE

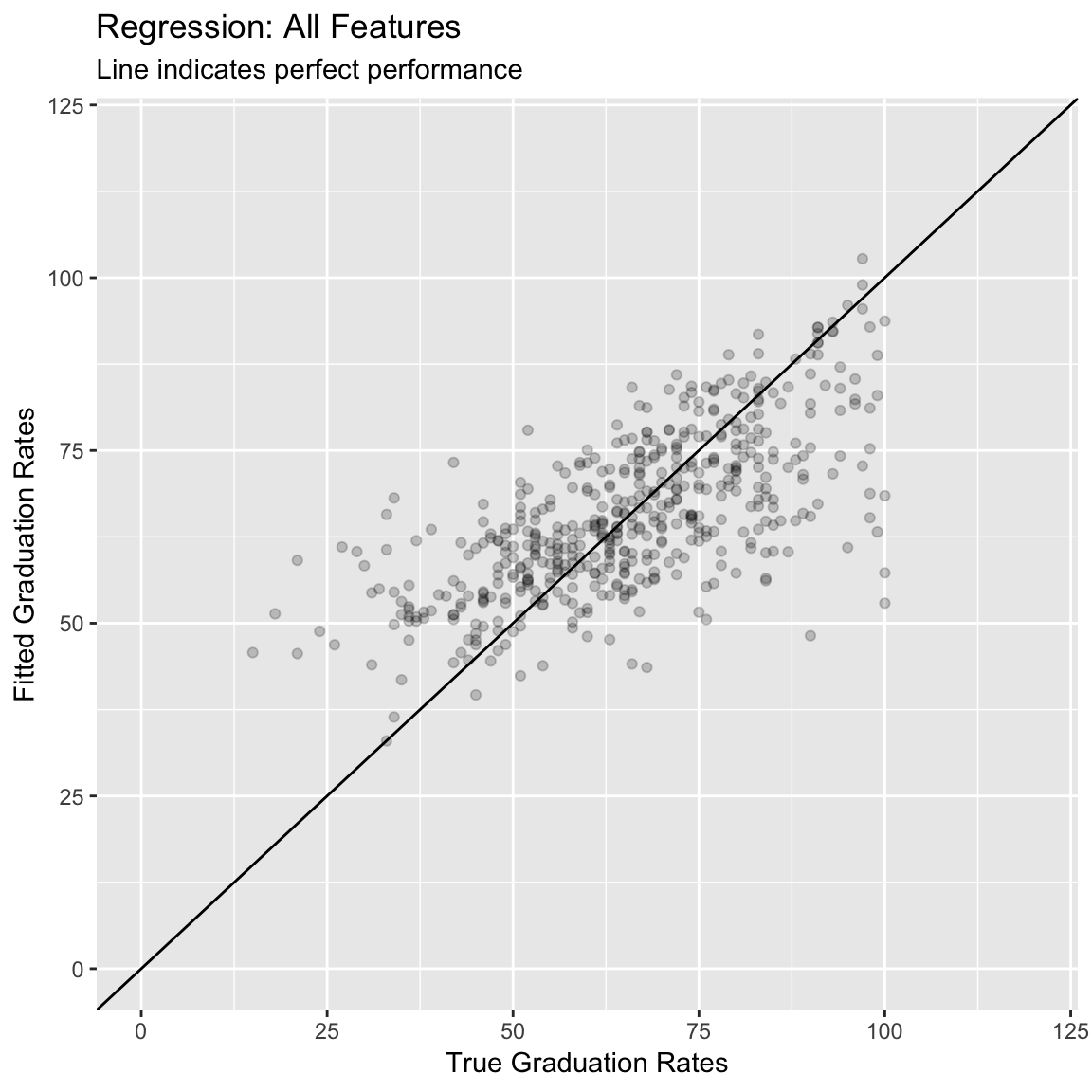

12.214 0.473 9.250 - (Optional). Create a scatter plot showing the relationship between your new model fits and the true values. How does this plot compare to your previous one?

# accuracy: a dataframe of raw absolute errors

accuracy <- tibble(criterion = criterion,

Regression = glm_fit) %>%

gather(model, prediction, -criterion) %>%

# Add error measures

mutate(ae = abs(prediction - criterion))

# accuracy_agg: Dataframe of aggregate errors

accuracy_agg <- accuracy %>%

group_by(model) %>%

summarise(mae = mean(ae)) # Calculate MAE (mean absolute error)

# Plot A) Scatterplot of criterion versus predictions

ggplot(data = accuracy,

aes(x = criterion, y = prediction)) +

geom_point(alpha = .2) +

geom_abline(slope = 1, intercept = 0) +

labs(title = "Regression: All Features",

subtitle = "Line indicates perfect performance",

x = "True Graduation Rates",

y = "Fitted Graduation Rates") +

xlim(0, 120) +

ylim(0, 120)

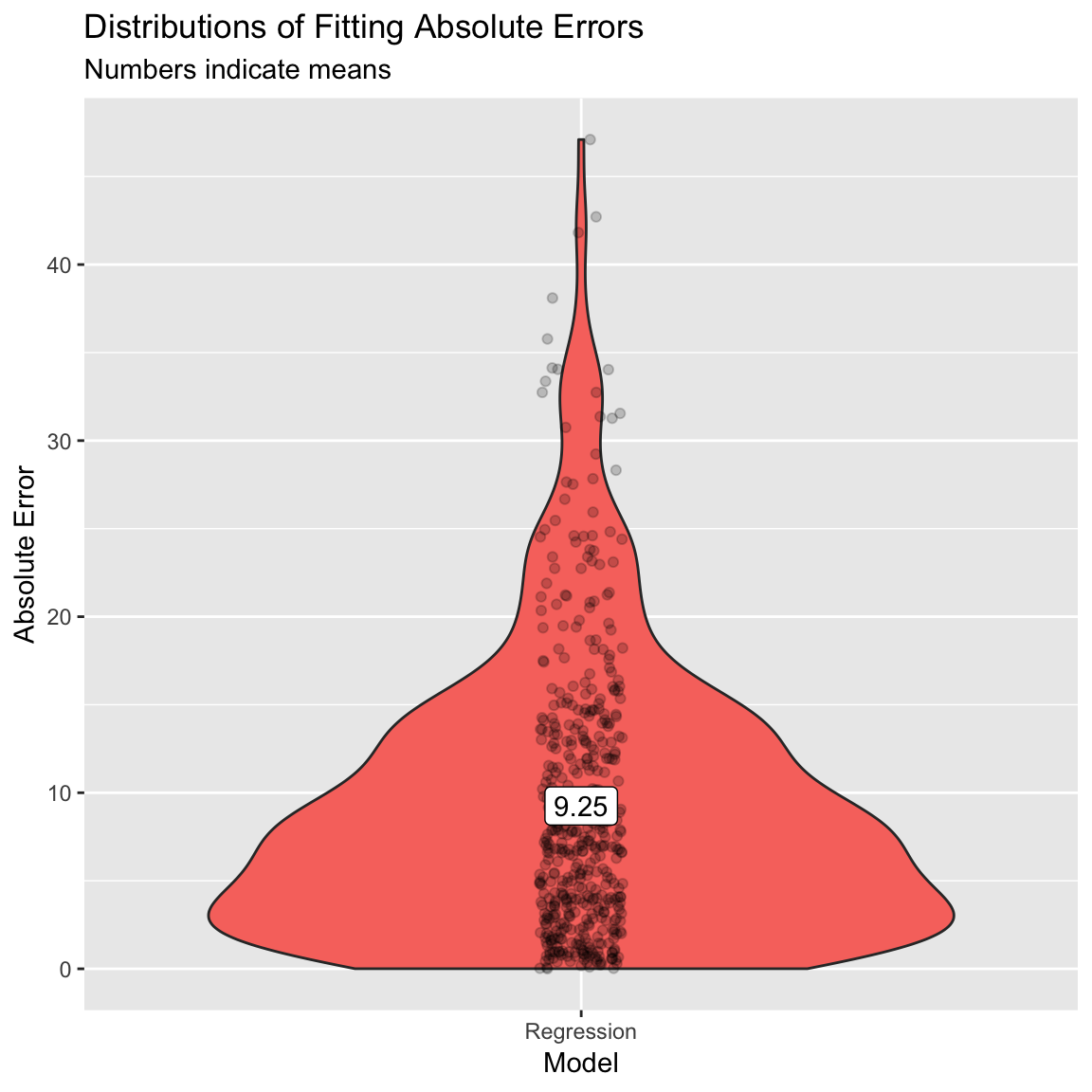

- (Optional). Create a violin plot showing the distribution of absolute errors. How does this compare to your previous one?

# Plot B) Violin plot of absolute errors

ggplot(data = accuracy,

aes(x = model, y = ae, fill = model)) +

geom_violin() +

geom_jitter(width = .05, alpha = .2) +

labs(title = "Distributions of Fitting Absolute Errors",

subtitle = "Numbers indicate means",

x = "Model",

y = "Absolute Error") +

guides(fill = FALSE) +

annotate(geom = "label",

x = accuracy_agg$model,

y = accuracy_agg$mae,

label = round(accuracy_agg$mae, 2))

Classification

G - Make sure your criterion is a factor!

Now it’s time to do a classification task! Recall that in a classification task, we are predicting a category, not a continuous number. In this task, we’ll predict whether or not a college is Private or Public, this is stored as the variable college_train$Private.

- In order to do classification training with

caret, all you need to do is make sure that the criterion is coded as a factor. To test whether it is coded as a factor, you can look at itsclassas follows.

# Look at the class of the variable Private, should be a factor!

class(college_train$Private)[1] "factor"- Now, we’ll save the Private column as a new object called

criterion.

# Define criterion as college_train$Private

criterion <- college_train$PrivateH - Fit a classification model

- Using

train(), createPrivate_glm, a regression model predicting the variablePrivate. Specifically,…

- set the

formargument toPrivate ~ .. - set the

dataargument to the training datacollege_train. - set the

methodargument to"glm". - set the

trControlargument toctrl_none.

# Fit regression model predicting Private

Private_glm <- train(form = XXX ~ .,

data = XXX,

method = "XXX",

trControl = XXX)# Fit regression model predicting private

Private_glm <- train(form = Private ~ .,

data = college_train,

method = "glm",

trControl = ctrl_none)- Explore the

Private_glmobject using thesummary()function.

# Explore the Private_glm object

summary(XXX)# Show summary information from the regression model

summary(Private_glm)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-2.9426 -0.0453 0.0272 0.1179 2.5261

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 1.25e+00 2.28e+00 0.55 0.5839

Apps -2.79e-04 2.71e-04 -1.03 0.3028

Accept -1.21e-03 5.48e-04 -2.20 0.0276 *

Enroll 3.90e-03 1.40e-03 2.80 0.0052 **

Top10perc -1.67e-02 3.82e-02 -0.44 0.6619

Top25perc 3.09e-02 2.76e-02 1.12 0.2640

F.Undergrad -4.14e-04 1.68e-04 -2.46 0.0140 *

P.Undergrad -1.76e-04 2.05e-04 -0.86 0.3899

Outstate 8.48e-04 1.55e-04 5.47 4.5e-08 ***

Room.Board 7.35e-04 3.60e-04 2.04 0.0410 *

Books 3.42e-03 1.83e-03 1.87 0.0619 .

Personal -6.20e-04 3.88e-04 -1.60 0.1097

PhD -5.63e-02 3.73e-02 -1.51 0.1315

Terminal -6.57e-02 3.68e-02 -1.79 0.0739 .

S.F.Ratio -1.91e-01 7.46e-02 -2.56 0.0104 *

perc.alumni 4.77e-02 2.79e-02 1.71 0.0876 .

Expend 2.81e-05 1.53e-04 0.18 0.8542

Grad.Rate 7.30e-03 1.48e-02 0.49 0.6220

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 609.16 on 499 degrees of freedom

Residual deviance: 144.29 on 482 degrees of freedom

AIC: 180.3

Number of Fisher Scoring iterations: 8- Look at the results, how do you interpret the regression coefficients? Which features seem important in predicting whether a school is private or not?

# Looking at the z statistics, Outstate, Enroll and S.F.Ratio (...) have quite large z-statisticsI - Access classification model accuracy

- Now it’s time to save the model’s fitted values! Do this by running the following code to save the fitted values as

glm_fit.

# Get fitted values from the Private_glm object

glm_fit <- predict(XXX)# Get fitted values from the Private_glm object

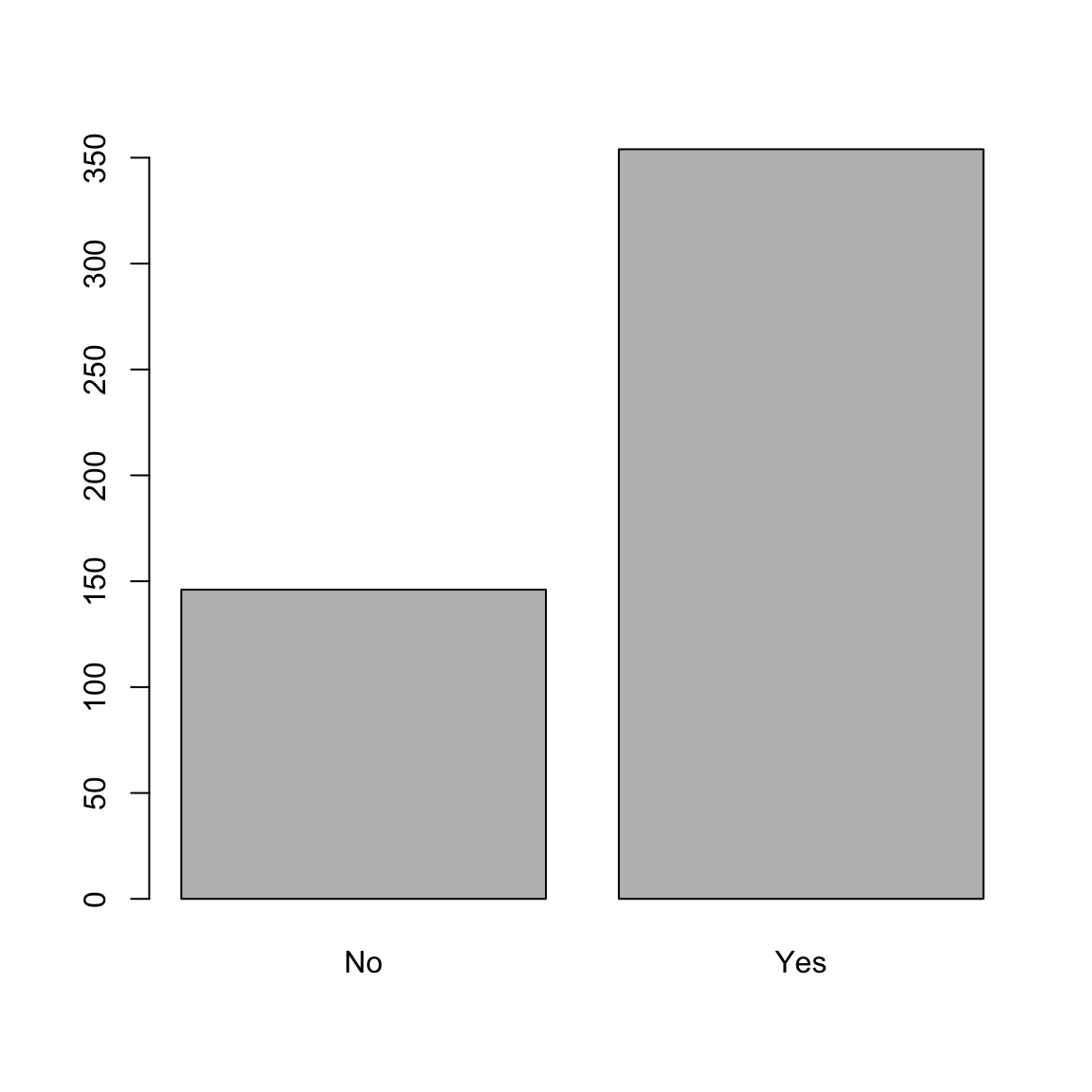

glm_fit <- predict(Private_glm)- Plot the values of your

glm_fitobject - what are these values? Do they look reasonable?

# Plot glm_fit

plot(glm_fit)

- Now it’s time to calculate model accuracy. To do this, we will use a new function called

confusionMatrix(). This function compares model predictions to a ‘reference’ (in our case, the criterion, and returns several summary statistics). In the code below, we’ll useglm_fitas the model predictions, and our already definedcriterionvector as the reference (aka, truth). Specifically,…

- set the

dataargument to yourglm_fitvalues. - set the

referenceargument to thecriterionvalues.

# Show accuracy of glm_fit versus the true criterion values

confusionMatrix(data = XXX, # This is the prediction!

reference = XXX) # This is the truth!# Show accuracy of glm_fit versus the true values

confusionMatrix(data = glm_fit, # This is the prediction!

reference = criterion) # This is the truth!Confusion Matrix and Statistics

Reference

Prediction No Yes

No 133 13

Yes 16 338

Accuracy : 0.942

95% CI : (0.918, 0.961)

No Information Rate : 0.702

P-Value [Acc > NIR] : <2e-16

Kappa : 0.861

Mcnemar's Test P-Value : 0.71

Sensitivity : 0.893

Specificity : 0.963

Pos Pred Value : 0.911

Neg Pred Value : 0.955

Prevalence : 0.298

Detection Rate : 0.266

Detection Prevalence : 0.292

Balanced Accuracy : 0.928

'Positive' Class : No

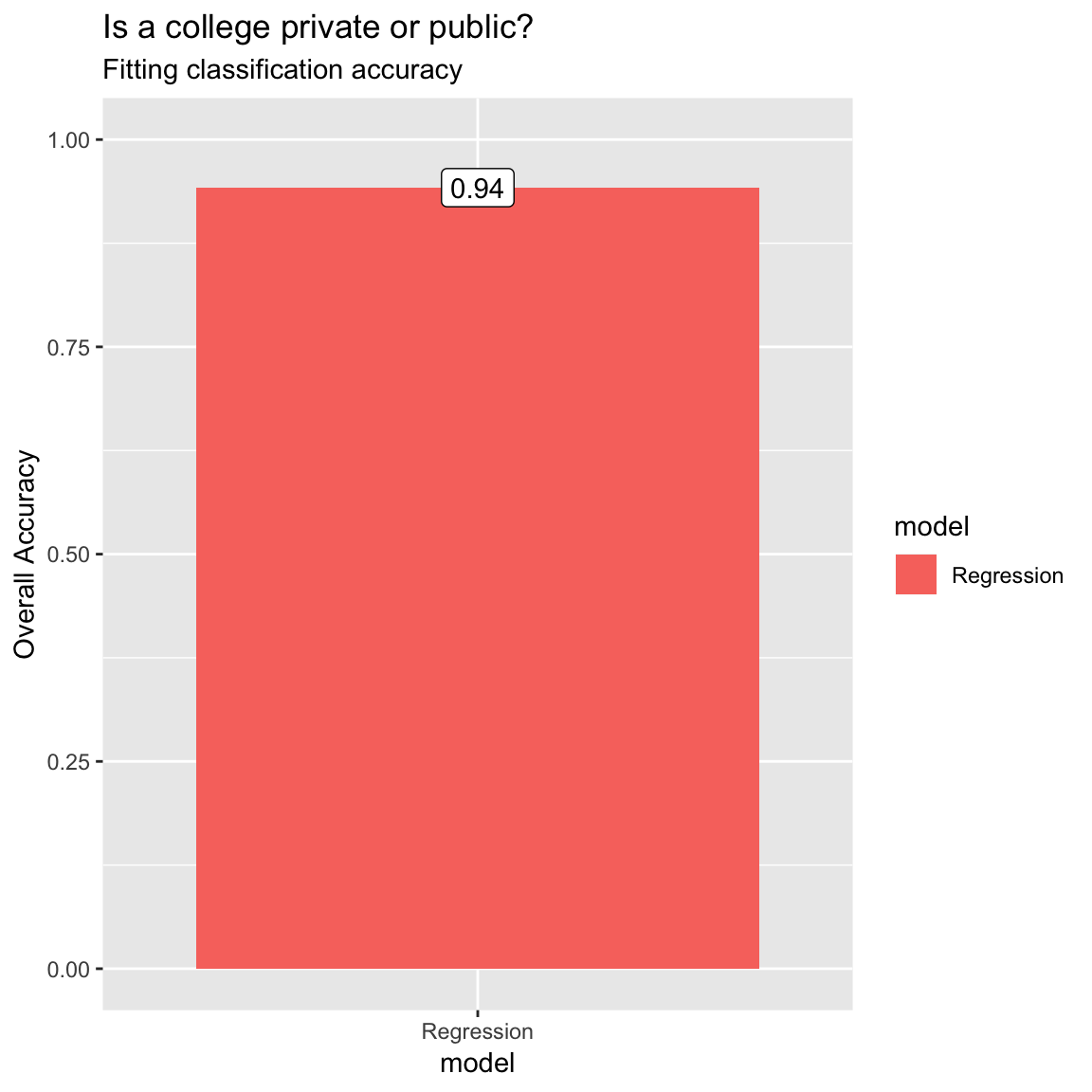

- Look at the results, what is the overall accuracy of the model? How do you interpret this?

# The overall accuracy is 0.942. Across all cases, the model fits the true class values 94.2% of the time.- What is the sensitivity? How do you interpret this number?

# The sensitivity is 0.893. Of those colleges that truly are private, the model fits are correct 89.3% of the time.- What is the positive predictive value? How do you interpret this number?

# The PPV is 0.911. Of those colleges that are predicted to be private, 91.1% truly are private.- What is the specificity? How do you interpret this number?

# The sensitivity is 0.963. Of those collges that truly are not private, the model fits are correct 96.3% of the time- What is the negative predictive value? How do you interpret this number?

# The NPV is 0.955. Of those colleges that are predicted to be public, 95.5% truly are public.- To visualize the accuracy of your classification models, use the following code to create a bar plot.

# Get overall accuracy from regression model

glm_accuracy <- confusionMatrix(data = glm_fit,

reference = criterion)$overall[1]

# Combine results into one table

accuracy <- tibble(Regression = glm_accuracy) %>%

gather(model, accuracy)

# Plot the results!

ggplot(accuracy, aes(x = model, y = accuracy, fill = model)) +

geom_bar(stat = "identity") +

labs(title = "Is a college private or public?",

subtitle = "Fitting classification accuracy",

y = "Overall Accuracy") +

ylim(c(0, 1)) +

annotate(geom = "label",

x = accuracy$model,

y = accuracy$accuracy,

label = round(accuracy$accuracy, 2))

Z - Challenges

- Conduct a regression analysis predicting the percent of alumni who donate to the college (

perc.alumni). How good can your regression model fit this criterion? Which variables seem to be important in predicting it?

mod <- train(form = perc.alumni ~ .,

data = college_train,

method = "glm",

trControl = ctrl_none)

summary(mod)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-24.48 -6.05 -0.30 5.12 31.93

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.22e+00 4.43e+00 0.95 0.34142

PrivateYes 1.48e+00 1.54e+00 0.96 0.33690

Apps -7.58e-04 4.21e-04 -1.80 0.07231 .

Accept -1.66e-03 7.62e-04 -2.18 0.02984 *

Enroll 6.88e-03 2.08e-03 3.31 0.00101 **

Top10perc 3.65e-02 6.30e-02 0.58 0.56276

Top25perc 7.30e-02 4.93e-02 1.48 0.13894

F.Undergrad -3.32e-04 3.38e-04 -0.98 0.32622

P.Undergrad 5.27e-05 3.27e-04 0.16 0.87191

Outstate 1.09e-03 2.19e-04 4.95 1e-06 ***

Room.Board -1.75e-03 5.21e-04 -3.35 0.00088 ***

Books -3.72e-04 2.99e-03 -0.12 0.90100

Personal -2.18e-03 7.23e-04 -3.01 0.00276 **

PhD -4.28e-02 5.19e-02 -0.82 0.41045

Terminal 1.40e-01 5.55e-02 2.53 0.01173 *

S.F.Ratio -2.55e-01 1.40e-01 -1.82 0.06873 .

Expend 8.48e-05 1.49e-04 0.57 0.56928

Grad.Rate 1.17e-01 3.28e-02 3.57 0.00039 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for gaussian family taken to be 82.5)

Null deviance: 73707 on 499 degrees of freedom

Residual deviance: 39764 on 482 degrees of freedom

AIC: 3645

Number of Fisher Scoring iterations: 2mod_predictions <- predict(mod)

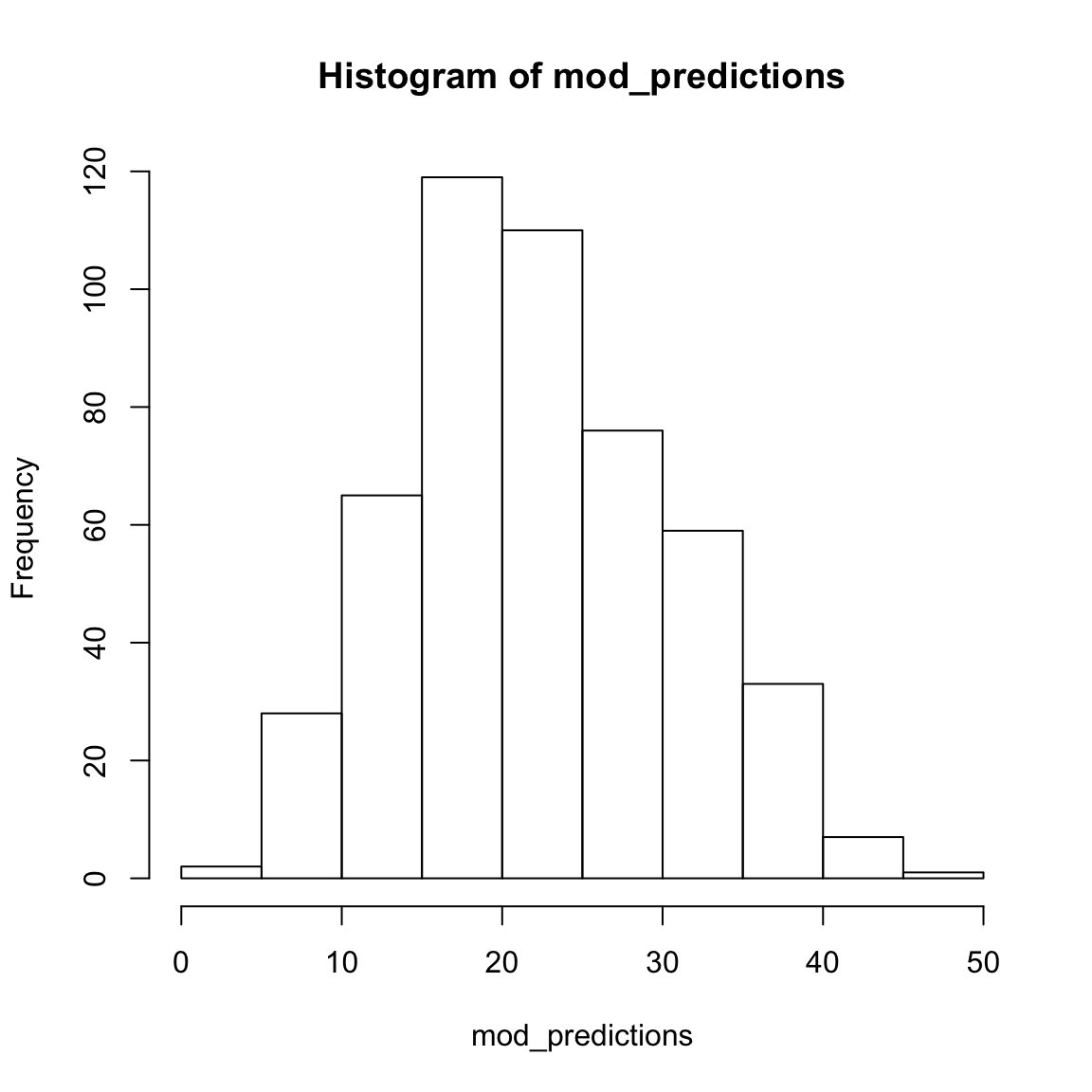

hist(mod_predictions)

postResample(pred = mod_predictions,

obs = college_train$perc.alumni) RMSE Rsquared MAE

8.918 0.461 7.024 - Conduct a classification analysis predicting whether or not a school is ‘hot’ – where a ‘hot’ school is one that receives at least 10,000 applications (Hint: use the code below to create the

hotvariable).

# Add a new factor criterion 'hot' which indicates whether or not a schol receives at least 10,000 applications

college_train <- college_train %>%

mutate(hot = factor(Apps >= 10000))mod_hot <- train(form = hot ~ .,

data = college_train,

method = "glm",

trControl = ctrl_none)

summary(mod_hot)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-7.52e-05 -2.00e-08 -2.00e-08 -2.00e-08 6.52e-05

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -6.34e+01 1.96e+05 0 1

PrivateYes -4.40e+00 6.42e+04 0 1

Apps 1.96e-02 1.62e+01 0 1

Accept -8.88e-03 1.83e+01 0 1

Enroll 1.17e-02 4.31e+01 0 1

Top10perc -8.07e-01 2.33e+03 0 1

Top25perc 5.81e-01 2.14e+03 0 1

F.Undergrad 1.40e-03 7.34e+00 0 1

P.Undergrad -1.65e-05 3.31e+00 0 1

Outstate -6.25e-04 1.15e+01 0 1

Room.Board 6.30e-03 1.24e+01 0 1

Books -6.36e-02 1.09e+02 0 1

Personal -3.81e-05 2.95e+01 0 1

PhD -1.39e+00 2.10e+03 0 1

Terminal -2.41e-01 4.59e+03 0 1

S.F.Ratio -8.91e-01 3.22e+03 0 1

perc.alumni 1.86e-01 2.74e+03 0 1

Expend 6.00e-04 5.57e+00 0 1

Grad.Rate 3.72e-01 1.89e+03 0 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 2.5364e+02 on 499 degrees of freedom

Residual deviance: 4.4973e-08 on 481 degrees of freedom

AIC: 38

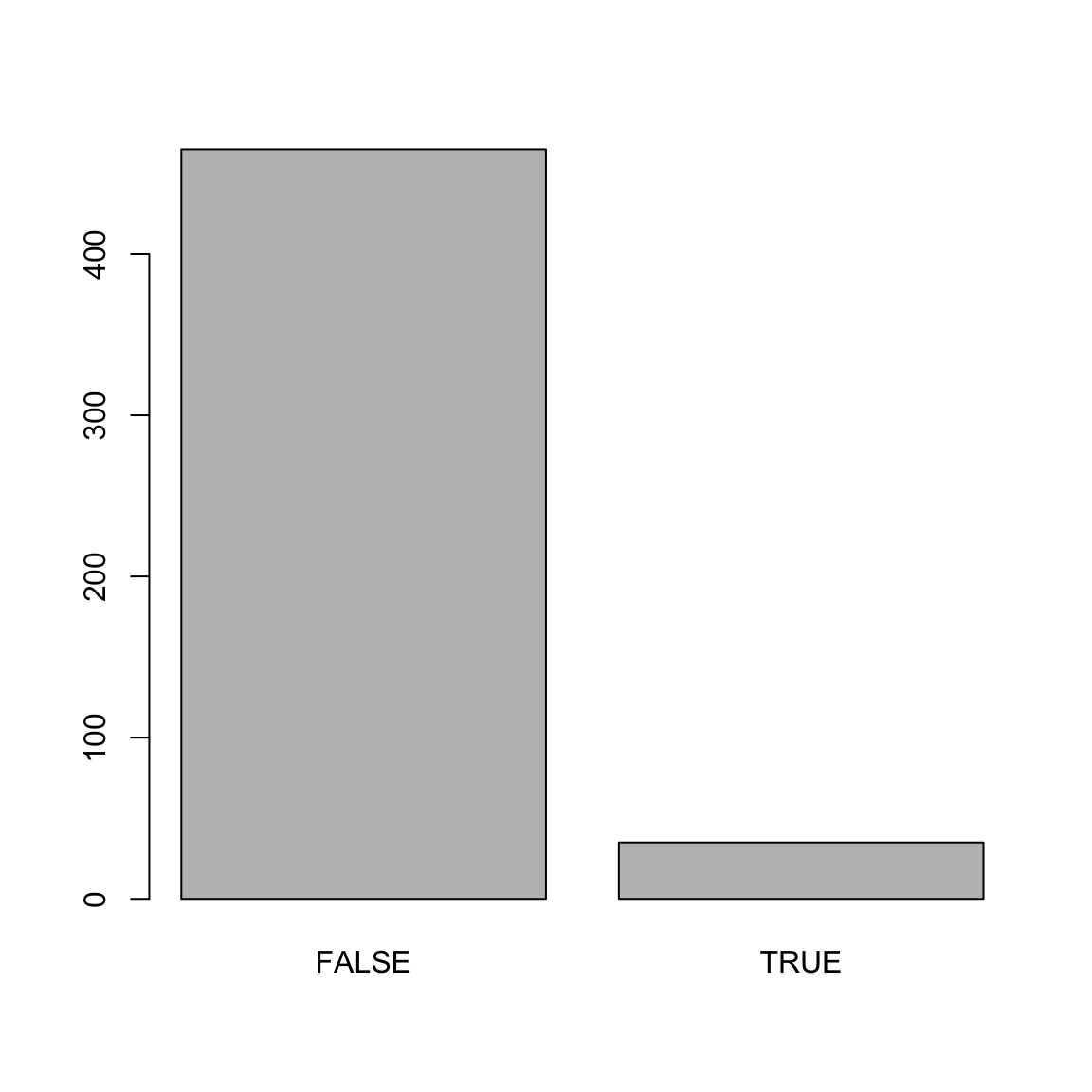

Number of Fisher Scoring iterations: 25mod_predictions <- predict(mod_hot)

plot(mod_predictions)

confusionMatrix(data = mod_predictions, # This is the prediction!

reference = college_train$hot) # This is the truth!Confusion Matrix and Statistics

Reference

Prediction FALSE TRUE

FALSE 465 0

TRUE 0 35

Accuracy : 1

95% CI : (0.993, 1)

No Information Rate : 0.93

P-Value [Acc > NIR] : <2e-16

Kappa : 1

Mcnemar's Test P-Value : NA

Sensitivity : 1.00

Specificity : 1.00

Pos Pred Value : 1.00

Neg Pred Value : 1.00

Prevalence : 0.93

Detection Rate : 0.93

Detection Prevalence : 0.93

Balanced Accuracy : 1.00

'Positive' Class : FALSE

- Did you notice anything strange in your model when doing the previous task? If you used all available predictors you will have gotten a warning that your model did not converge. That can happen if the maximum number of iterations (glm uses an iterative procedure when fitting the model) is reached. The default is a maximum of 25 iterations, see

?glm.control. To fix it just add the following code in yourtrain()functioncontrol = list(maxit = 75), and run it again.

mod_hot <- train(form = hot ~ .,

data = college_train,

method = "glm",

trControl = ctrl_none,

control = list(maxit = 75))

summary(mod_hot)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-6.30e-06 -2.10e-08 -2.10e-08 -2.10e-08 5.38e-06

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -7.72e+01 2.47e+06 0 1

PrivateYes -6.17e+00 8.40e+05 0 1

Apps 2.43e-02 2.10e+02 0 1

Accept -1.11e-02 2.25e+02 0 1

Enroll 1.48e-02 5.65e+02 0 1

Top10perc -1.03e+00 3.24e+04 0 1

Top25perc 7.45e-01 2.91e+04 0 1

F.Undergrad 1.86e-03 9.21e+01 0 1

P.Undergrad -3.63e-05 4.00e+01 0 1

Outstate -7.15e-04 1.54e+02 0 1

Room.Board 7.87e-03 1.50e+02 0 1

Books -7.96e-02 1.33e+03 0 1

Personal -1.79e-04 3.80e+02 0 1

PhD -1.74e+00 2.58e+04 0 1

Terminal -3.30e-01 5.87e+04 0 1

S.F.Ratio -1.16e+00 4.00e+04 0 1

perc.alumni 2.05e-01 3.42e+04 0 1

Expend 7.89e-04 7.32e+01 0 1

Grad.Rate 4.91e-01 2.41e+04 0 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 2.5364e+02 on 499 degrees of freedom

Residual deviance: 3.0770e-10 on 481 degrees of freedom

AIC: 38

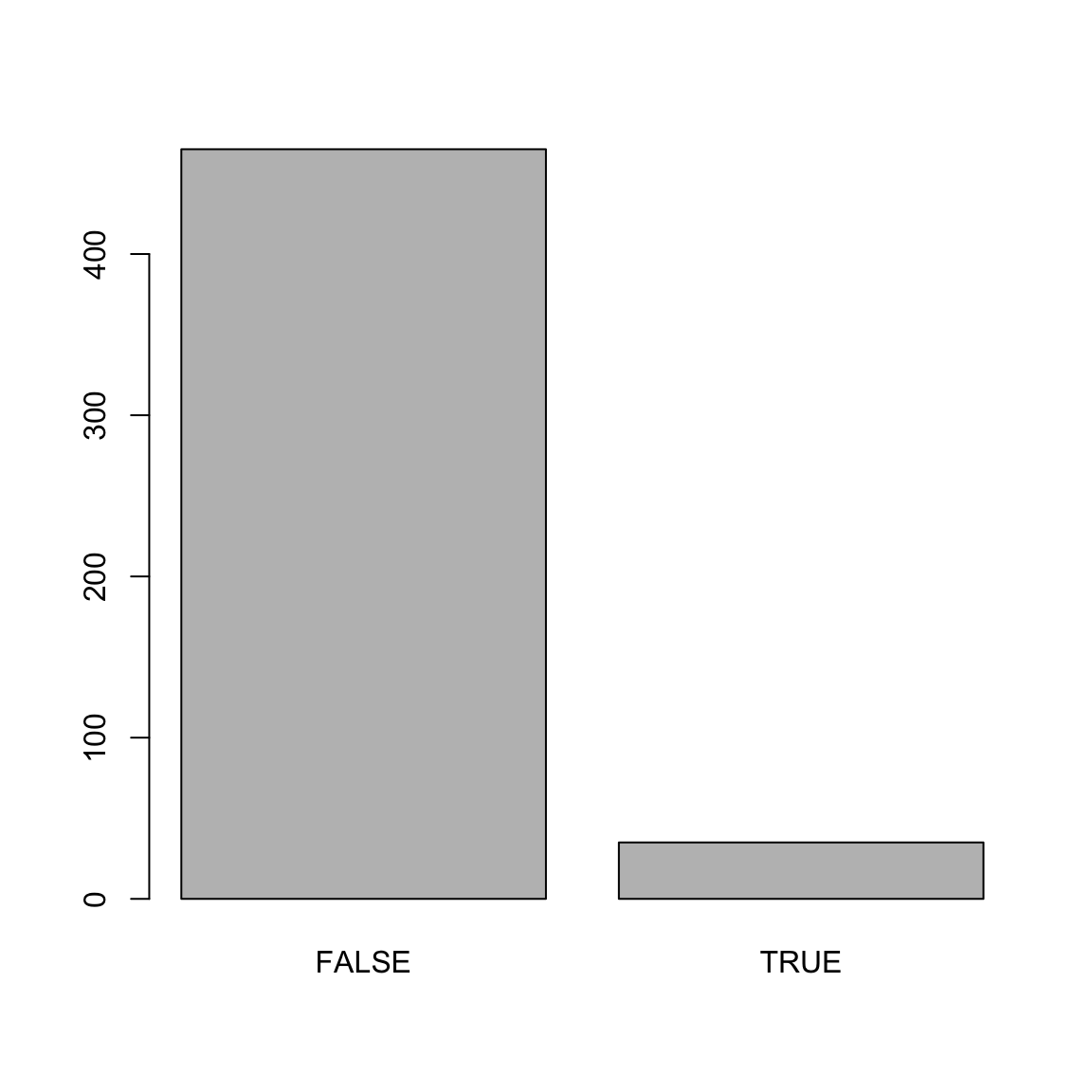

Number of Fisher Scoring iterations: 30mod_predictions <- predict(mod_hot)

plot(mod_predictions)

confusionMatrix(data = mod_predictions, # This is the prediction!

reference = college_train$hot) # This is the truth!Confusion Matrix and Statistics

Reference

Prediction FALSE TRUE

FALSE 465 0

TRUE 0 35

Accuracy : 1

95% CI : (0.993, 1)

No Information Rate : 0.93

P-Value [Acc > NIR] : <2e-16

Kappa : 1

Mcnemar's Test P-Value : NA

Sensitivity : 1.00

Specificity : 1.00

Pos Pred Value : 1.00

Neg Pred Value : 1.00

Prevalence : 0.93

Detection Rate : 0.93

Detection Prevalence : 0.93

Balanced Accuracy : 1.00

'Positive' Class : FALSE

- Now the model should have converged, but there is still another warning occurring:

glm.fit: fitted probabilities numerically 0 or 1 occurred. This can happen if very strong predictors occur in the dataset (see Venables & Ripley, 2002, p. 197). If you added all predictors (except again the college names), then this problem occurs because theAppsvariable, used to create the criterion, was also part of the predictors (plus some other variables that highly correlate withApps). Check the variable correlations (the code below will give you a matrix of bivariate correlations). You will learn an easier way of checking the correlations of variables in a later session.

# get correlation matrix of numeric variables

cor(college_train[,sapply(college_train, is.numeric)])- Now fit the model again but only select variables that are not directly related to the number of applications (here several solutions are possible, there is no clear-cut criterion about which variables to include and which to discard).

mod_hot <- train(form = hot ~ . - Apps -Enroll -Accept - F.Undergrad,

data = college_train,

method = "glm",

trControl = ctrl_none,

control = list(maxit = 75))

summary(mod_hot)

Call:

NULL

Deviance Residuals:

Min 1Q Median 3Q Max

-2.0603 -0.1783 -0.0609 -0.0177 3.0120

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -1.49e+01 4.42e+00 -3.37 0.00075 ***

PrivateYes -4.85e+00 1.32e+00 -3.67 0.00025 ***

Top10perc 2.42e-02 2.60e-02 0.93 0.35115

Top25perc 2.66e-02 2.73e-02 0.98 0.32940

P.Undergrad 5.22e-04 1.62e-04 3.23 0.00124 **

Outstate 8.41e-05 1.30e-04 0.65 0.51670

Room.Board 7.85e-04 3.33e-04 2.36 0.01826 *

Books -2.08e-03 2.33e-03 -0.90 0.37057

Personal 2.77e-04 4.08e-04 0.68 0.49704

PhD 1.65e-02 5.69e-02 0.29 0.77228

Terminal 1.97e-02 6.10e-02 0.32 0.74625

S.F.Ratio -2.56e-03 8.09e-02 -0.03 0.97480

perc.alumni -3.23e-02 3.21e-02 -1.01 0.31366

Expend 2.68e-05 6.19e-05 0.43 0.66542

Grad.Rate 6.40e-02 2.60e-02 2.47 0.01369 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 253.64 on 499 degrees of freedom

Residual deviance: 121.87 on 485 degrees of freedom

AIC: 151.9

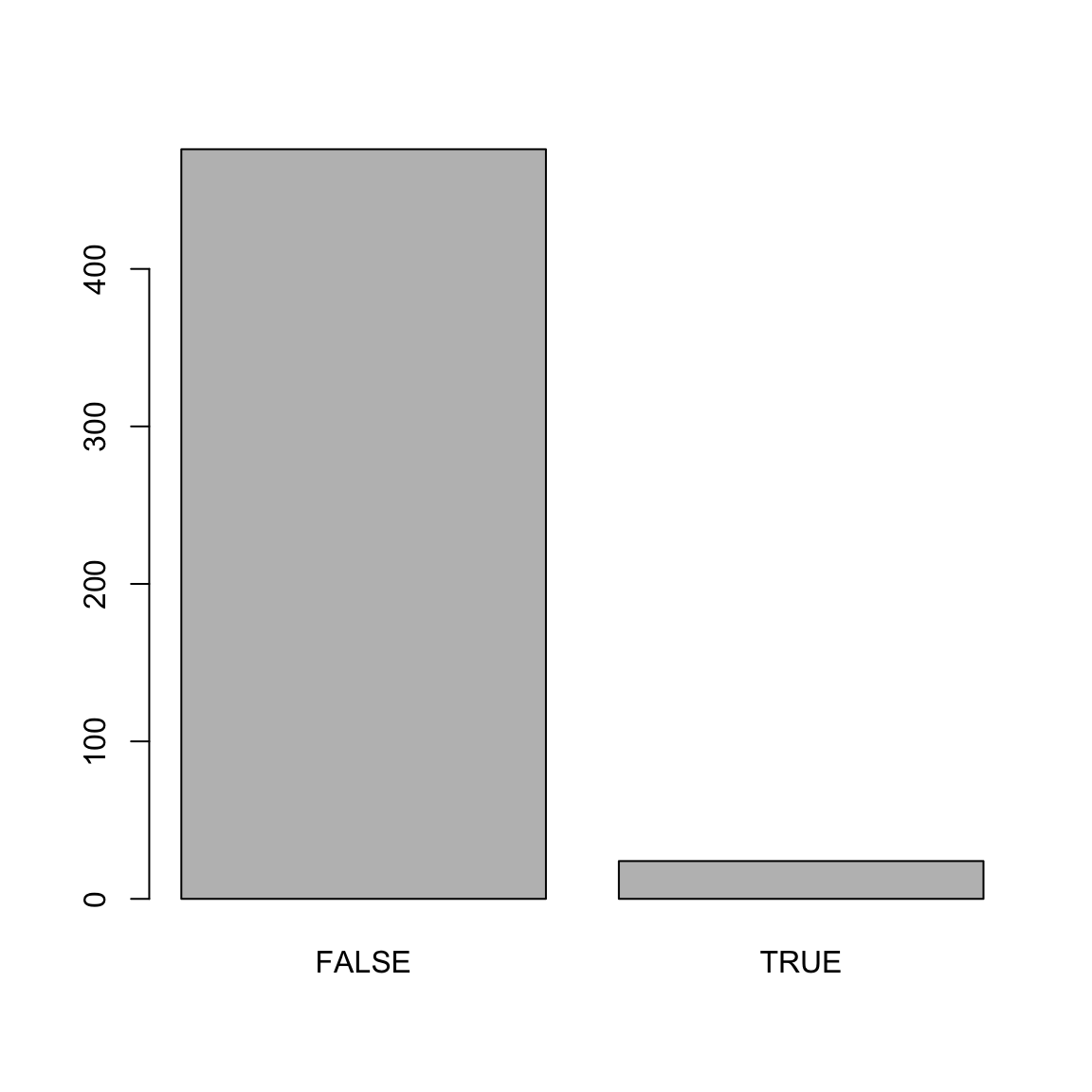

Number of Fisher Scoring iterations: 8mod_predictions <- predict(mod_hot)

plot(mod_predictions)

confusionMatrix(data = mod_predictions, # This is the prediction!

reference = college_train$hot) # This is the truth!Confusion Matrix and Statistics

Reference

Prediction FALSE TRUE

FALSE 458 18

TRUE 7 17

Accuracy : 0.95

95% CI : (0.927, 0.967)

No Information Rate : 0.93

P-Value [Acc > NIR] : 0.0429

Kappa : 0.551

Mcnemar's Test P-Value : 0.0455

Sensitivity : 0.985

Specificity : 0.486

Pos Pred Value : 0.962

Neg Pred Value : 0.708

Prevalence : 0.930

Detection Rate : 0.916

Detection Prevalence : 0.952

Balanced Accuracy : 0.735

'Positive' Class : FALSE

Examples

# Fitting and evaluating a regression model ------------------------------------

# Step 0: Load packages-----------

library(tidyverse) # Load tidyverse for dplyr and tidyr

library(caret) # For ML mastery

# Step 1: Load and Clean, and Explore Training data ----------------------

# I'll use the mpg dataset from the dplyr package in this example

# no need to load an external dataset

data_train <- read_csv("1_Data/mpg_train.csv")

# Convert all characters to factor

# Some ML models require factors

data_train <- data_train %>%

mutate_if(is.character, factor)

# Explore training data

data_train # Print the dataset

View(data_train) # Open in a new spreadsheet-like window

dim(data_train) # Print dimensions

names(data_train) # Print the names

# Step 2: Define training control parameters -------------

# In this case, I will set method = "none" to fit to

# the entire dataset without any fancy methods

# such as cross-validation

train_control <- trainControl(method = "none")

# Step 3: Train model: -----------------------------

# Criterion: hwy

# Features: year, cyl, displ, trans

# Regression

hwy_glm <- train(form = hwy ~ year + cyl + displ + trans,

data = data_train,

method = "glm",

trControl = train_control)

# Look at summary information

summary(hwy_glm)

# Step 4: Access fit ------------------------------

# Save fitted values

glm_fit <- predict(hwy_glm)

# Define data_train$hwy as the true criterion

criterion <- data_train$hwy

# Regression Fitting Accuracy

postResample(pred = glm_fit,

obs = criterion)

# RMSE Rsquared MAE

# 3.246182 0.678465 2.501346

# On average, the model fits are 2.8 away from the true

# criterion values

# Step 5: Visualise Accuracy -------------------------

# Tidy competition results

accuracy <- tibble(criterion = criterion,

Regression = glm_fit) %>%

gather(model, prediction, -criterion) %>%

# Add error measures

mutate(se = prediction - criterion,

ae = abs(prediction - criterion))

# Calculate summaries

accuracy_agg <- accuracy %>%

group_by(model) %>%

summarise(mae = mean(ae)) # Calculate MAE (mean absolute error)

# Plot A) Scatterplot of criterion versus predictions

ggplot(data = accuracy,

aes(x = criterion, y = prediction, col = model)) +

geom_point(alpha = .2) +

geom_abline(slope = 1, intercept = 0) +

labs(title = "Predicting mpg$hwy",

subtitle = "Black line indicates perfect performance")

# Plot B) Violin plot of absolute errors

ggplot(data = accuracy,

aes(x = model, y = ae, fill = model)) +

geom_violin() +

geom_jitter(width = .05, alpha = .2) +

labs(title = "Distributions of Fitting Absolute Errors",

subtitle = "Numbers indicate means",

x = "Model",

y = "Absolute Error") +

guides(fill = FALSE) +

annotate(geom = "label",

x = accuracy_agg$model,

y = accuracy_agg$mae,

label = round(accuracy_agg$mae, 2))Datasets

| File | Rows | Columns |

|---|---|---|

| college_train.csv | 1000 | 21 |

- The

college_traindata are taken from theCollegedataset in theISLRpackage. They contain statistics for a large number of US Colleges from the 1995 issue of US News and World Report.

Variable description of college_train

| Name | Description |

|---|---|

Private |

A factor with levels No and Yes indicating private or public university. |

Apps |

Number of applications received. |

Accept |

Number of applications accepted. |

Enroll |

Number of new students enrolled. |

Top10perc |

Pct. new students from top 10% of H.S. class. |

Top25perc |

Pct. new students from top 25% of H.S. class. |

F.Undergrad |

Number of fulltime undergraduates. |

P.Undergrad |

Number of parttime undergraduates. |

Outstate |

Out-of-state tuition. |

Room.Board |

Room and board costs. |

Books |

Estimated book costs. |

Personal |

Estimated personal spending. |

PhD |

Pct. of faculty with Ph.D.’s. |

Terminal |

Pct. of faculty with terminal degree. |

S.F.Ratio |

Student/faculty ratio. |

perc.alumni |

Pct. alumni who donate. |

Expend |

Instructional expenditure per student. |

Grad.Rate |

Graduation rate. |

Functions

Packages

| Package | Installation |

|---|---|

tidyverse |

install.packages("tidyverse") |

caret |

install.packages("caret") |

Functions

| Function | Package | Description |

|---|---|---|

trainControl() |

caret |

Define modelling control parameters |

train() |

caret |

Train a model |

predict(object, newdata) |

base |

Predict the criterion values of newdata based on object |

postResample() |

caret |

Calculate aggregate model performance in regression tasks |

confusionMatrix() |

caret |

Calculate aggregate model performance in classification tasks |

Resources

Cheatsheet

from github.com/rstudio